Optimizing Data Collection Costs: A Comprehensive Guide

Expert corner

Zach Quinn

In 2024, data creation growth is projected to reach nearly 150 zettabytes –or 150 million petabytes. Even though analysts have repeatedly argued data is the new oil, few realize that, like oil refinement, data collection comes with its own hefty price tag.

Data collection costs creep up for two main reasons – technical and human. Both technical capabilities and human labor are fixed resources that cannot be scaled infinitely.

Technically, more data collection means a greater dependence on computing power like CPUs and data storage infrastructure. The human cost for maintaining these resources includes both the physical labor accompanying development and ongoing maintenance, as well as the time required to source talent and assemble technically capable teams.

Even with rapidly advancing data infrastructure solutions, optimizing data collection costs remains a challenge for organizations of any scale. Therefore, this guide will suggest strategies you, the data-minded decision maker, can implement to reduce data collection costs at every juncture of the data mining life cycle –from extraction to the final stage of long-term storage.

What Factors Increase Data Collection Costs?

The greatest contributing factor to rising data collection costs is the capital associated with building custom data pipelines and internal reporting solutions. When initially developing data collection mechanisms, companies will rely on out-of-the-box tools like Google Analytics , which offer both free and low-cost monthly usage tiers; therefore, certain low-complexity solutions do not require a significant financial investment.

For organizations whose data is stored by various platforms, it is often necessary to extract data from the back end of these services through a custom API integration . APIs will typically use volume-based (e.g., per request, per line of data delivered, etc.) pricing, which can quickly incur major costs for any large business.

Organizations that opt to use third-party web scraping tools will also find that costs can rise steadily as more and more data is mined from target sources, making it imperative to optimize these costs as quickly as possible. Web scraping success rates, number of CAPTCHAs, number of IP addresses banned, and many other factors play into data collection costs associated with these out-of-the-box solutions.

Conducting best data management practices can significantly reduce costs as well. While data management is not directly correlated with data acquisition costs, a solid data management framework will minimize the number of errors, duplicate information, and other issues that may negatively impact data collection.

Consequently, significant time needs to be invested in performing proper data cleaning and enforcing data management policies in order to transform the raw data, which often arrives in an unstructured form from the web, into a usable, monetizable final form. When estimating data collection spending, it is necessary to consider the cost of properly processing and mining the extracted data.

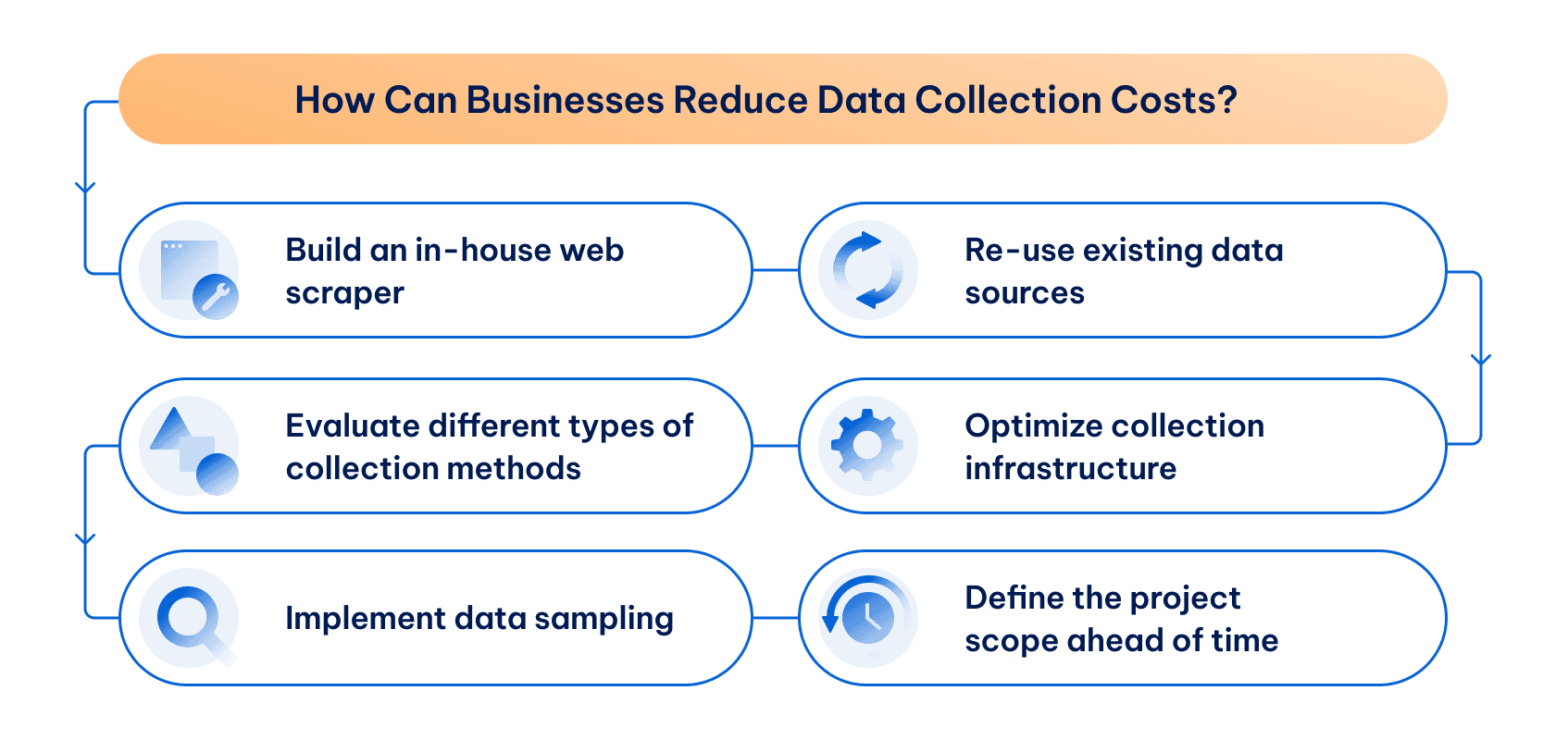

How Can Businesses Reduce Data Collection Costs?

The best data collection operations craft methodologies to extract large volumes of data while keeping costs reasonable. Organizations that are reaching later stages of data maturity that might be looking to use the extracted data to fuel ML models or AI integrations know that the monetary benefit of these tools makes short-term data collection costs worth it.

Even with the prospect of high data collection costs, successful companies in this space take immediate, thoughtful steps to own as much of the data extraction process so they don’t need to pay for costly vendors offering solutions that might not fit their businesses.

Build an In-house Web Scraper

If your business collects data from third-party public sources, building a web scraper in-house , while costly in the short term, can decrease costs over time. While buying web scraping services from another company offers a quick start-up and results, these costs will almost always be higher. After all, the web scraping company has to make money on the transaction.

Building a web scraper in-house, however, will definitely take time, labor, and buy-in from leadership. Creating a scalable, optimized web scraping script requires technical skill along with a product team that can find ways to deploy the product in a way that increases revenue.

Skilled developers, both contract and full-time employees, are in demand and often require high salaries in exchange for work on such projects. However, a purposeful implementation will create profit in excess of these costs and, in several months or years, drive considerable revenue.

Re-use Existing Data Sources

Prioritizing data sources will help guide your investment in data collection tools and the teams that support them. Since businesses will often have numerous sources of data, some of which may overlap, it is important to conduct an inventory of available data in order to reduce data redundancy and prevent duplication.

Smart data teams understand the available data before working to extract new data and, by extension, incur more costs and require more time investment from in-house employees and third parties.

Evaluate Different Types of Collection Methods

Just as you took inventory of the data you already have access to, it is important to preemptively examine the methods of data collection available to your organization and to conduct a thorough cost-benefit analysis before committing to any one method.

Sometimes, the same type of data can be collected through a multitude of methods – dataset providers, web scraping, or APIs all at once. Remember, if you already have or plan to develop in-house web scrapers, in the long run, the cost of data collection will often be the lowest with this data collection method.

Otherwise, compare all of the methods based on price and obstacles to implementation. Sometimes using third-party technologies integrated with APIs will be cheaper than commissioning and deploying web scrapers by virtue of extremely low implementation requirements.

As you conduct this evaluation, it is critical to ensure the data returned is timely, accurate, and relevant to organizational stakeholders.

Optimize Collection Infrastructure

Collecting data involves many moving parts and a robust infrastructure to be put into place. At a minimum, to sustain growth and the scaling of your data collection operation, you’ll need to consider creating a data warehouse and utilizing proxies .

A data warehouse provides a centralized repository or “home” for all data collected through your chosen data extraction method. This data storage framework also facilitates opportunities to “enrich” data sources by combining data to arrive at more nuanced insights.

While proxies are a vital part of web scrapers, they can also quickly incur costs. Picking a provider with competitive pricing for your web scrapers can significantly reduce data acquisition costs.

Data warehouses and data management infrastructure can also be closely tied to acquisition costs. You may be, for example, storing lots of duplicate entries from many or even a single data source. Implementing data cleaning practices won’t immediately reduce the cost of data collection, but it will still make the entire process more profitable.

Implement Data Sampling

Many business decision-makers will focus on collecting as much data as possible and worrying about resolving data quality issues downstream. However, the adage “garbage in, garbage out” applies here; data collection doesn’t have to be perfect, but collecting useless data will only increase the cost and lead to bloated data warehouses.

Implementing samples with controls for confidence and statistical significance will significantly reduce the amount of information that needs to be collected and, in turn, reduce the cost of data collection. Sampling is exceptionally common with data collection tools like surveys, but it often doesn’t get applied to web scraping and other practices, even though there is considerable benefit to doing so.

Define the Project Scope Ahead of Time

You would be surprised by how many companies start without defining data sources and the scope of the project beforehand. Consequently, the data collection process has no defined start and end dates, inevitably resulting in overcollection and the accumulation of “junk” data.

Collecting data with clear boundaries will not only reduce the duration of the process but will also make the teams responsible for the project a lot happier. Proper data management and project scopes allows teams to define clear goals and set attainable KPIs , which is essential to maintaining and monitoring performance.

Estimating the Cost of Data Collection

If you define the project scope beforehand, you’ll have a pretty good understanding of how much data is required to achieve your desired end result. Additionally, if data sources are known and validated, all of these factors can be used to estimate the cost of the project with greater accuracy.

A helpful formula to consider might be:

The first two parts are applicable to nearly any type of data collection. If you use APIs, for example, you’ll likely have a fixed amount of money you need to spend to implement the project.

Web scraping is a little bit different as it’s harder to estimate the cost of data collection, especially if you aggregate data from several different sources. Each source will have specific anti-bot technologies implemented and different parameters for web scraping defined in the site’s robots.txt file , which can complicate automated data collection efforts.

Therefore, it’s best to use historical information to estimate a difficulty weight and then apply the formula for each source in the project. While these weights will be averages, you’ll be able to estimate the cost of data collection with a higher degree of certainty and, by extension, be able to provide more accurate figures to organizational stakeholders and leadership.

Conclusion

Any business that aims to collect data at a large scale must envision a process that is purposeful, optimized, and cost-efficient. While in the early stages of building your organization’s data collection infrastructure, it is important to resist being tied to a singular ingestion solution.

Different kinds of data and different vendor sites require tailor-made solutions. Most likely, your data collection mechanism will involve a mixture of third-party or out-of-the-box solutions like Google Analytics, custom integrations with public APIs and, possibly, automated web scraping bots .

Diversifying your data collection methods allows your organization to avoid over-committing to a technology or methodology that could become inefficient or difficult to maintain long-term.

Even after a solution is implemented, it is important to consistently monitor and evaluate whether an implementation is both cost-efficient and creating revenue or empowering stakeholders to reach their department’s quarterly KPIs.

Being flexible in how to collect, store, and manage data is how an organization effectively scales data infrastructure and moves into later stages of data maturity , at which point it can use collected data to fuel more complex technologies like AI.

No matter which data solution you choose to develop and implement, it is important to remember that data collection is an ongoing process. Vendor or website changes can break even the most robust data pipelines and render a current solution unusable. Creating a sustainable data collection operation requires the right mix of technology and capable teams that can consistently mine and refine the “new oil.”