VBA Web Scraping: Extracting Data with Excel VBA

Tutorials

Justas Vitaitis

Web scraping is the process of extracting HTML files from websites and then searching through them to find valuable information. While most frequently performed through Python-based scripts, plenty of other options are available.

One of those options is Visual Basic for Applications. It’s the internal programming language for Microsoft Office applications , most widely used for Excel. Users can write various scripts that automate repetitive tasks and output actions directly into the Office suite.

Since Excel is a great tool for numerical data analysis, combining it with VBA makes it a powerful tool for scraping websites. Additionally, you don’t need to work with various additional libraries that would create files and format them – VBA web scraping delivers data directly to Excel.

How to Get Started with VBA Web Scraping

Since VBA is disabled by default, you’ll need to perform some preparatory steps to get access to the programming language:

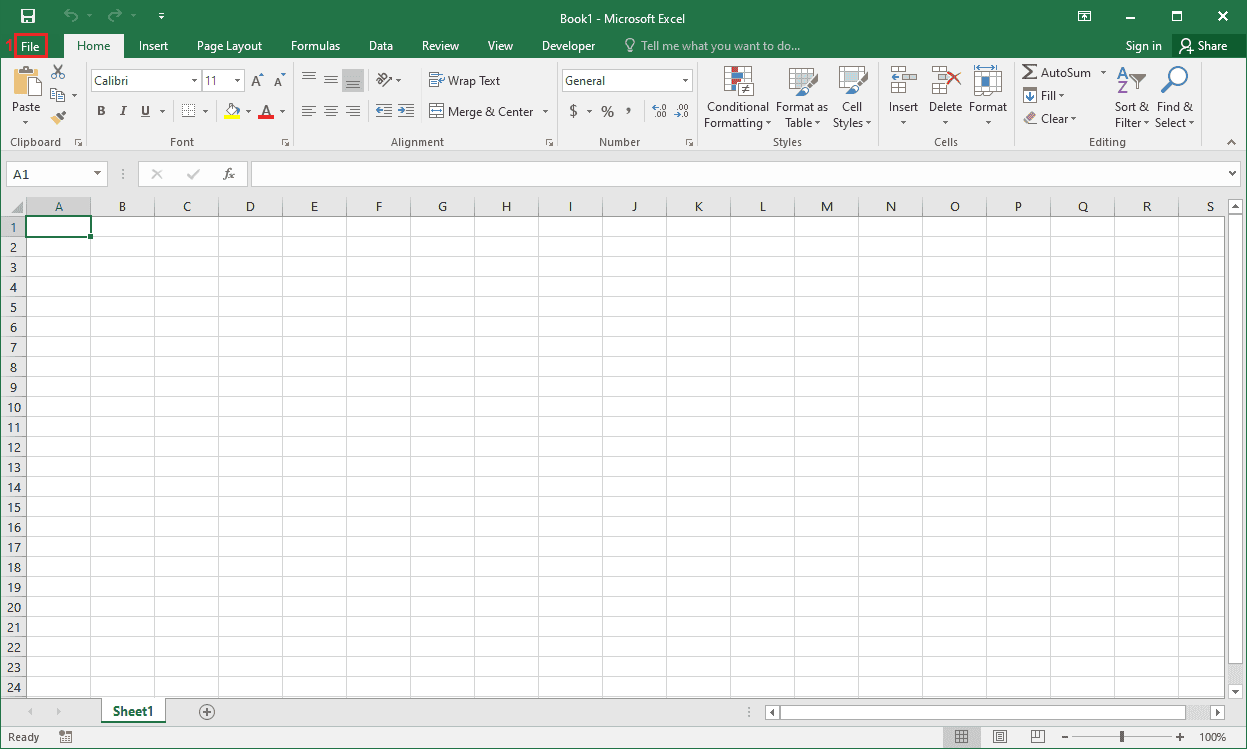

1. Open up an Excel file and click on File (1) .

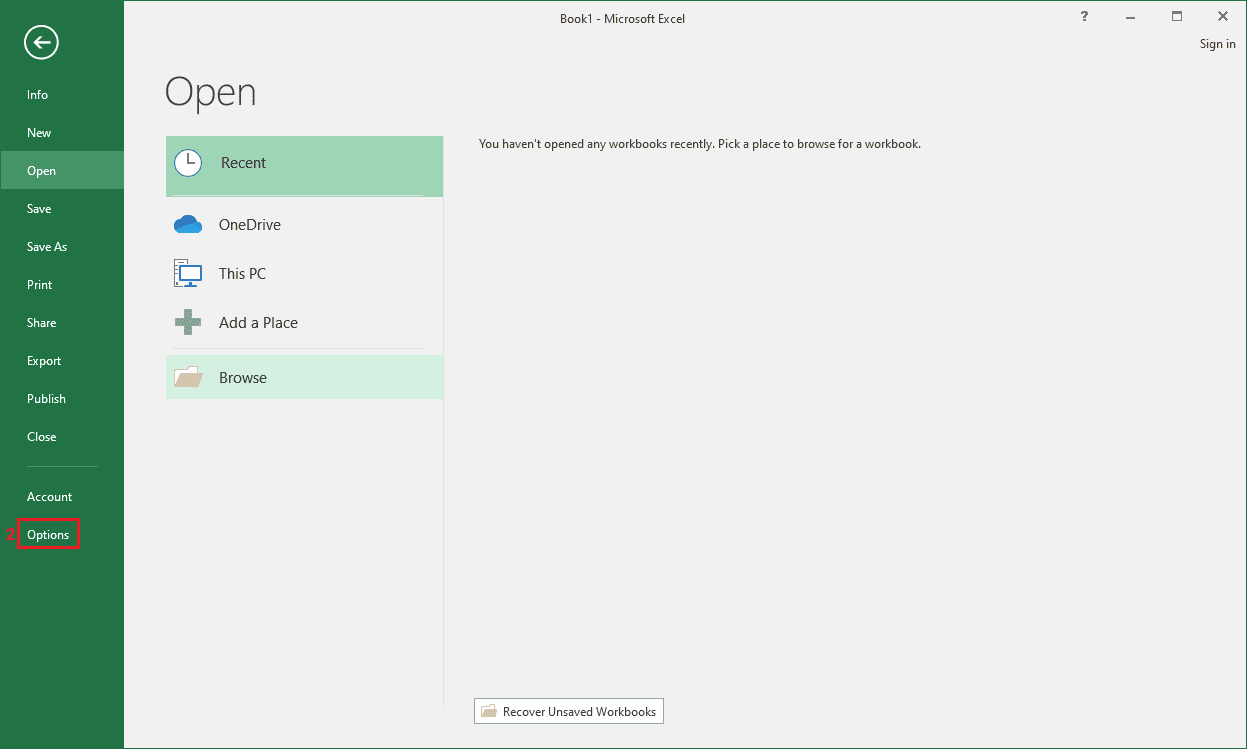

2. Click on Options (2) at the bottom of the side menu.

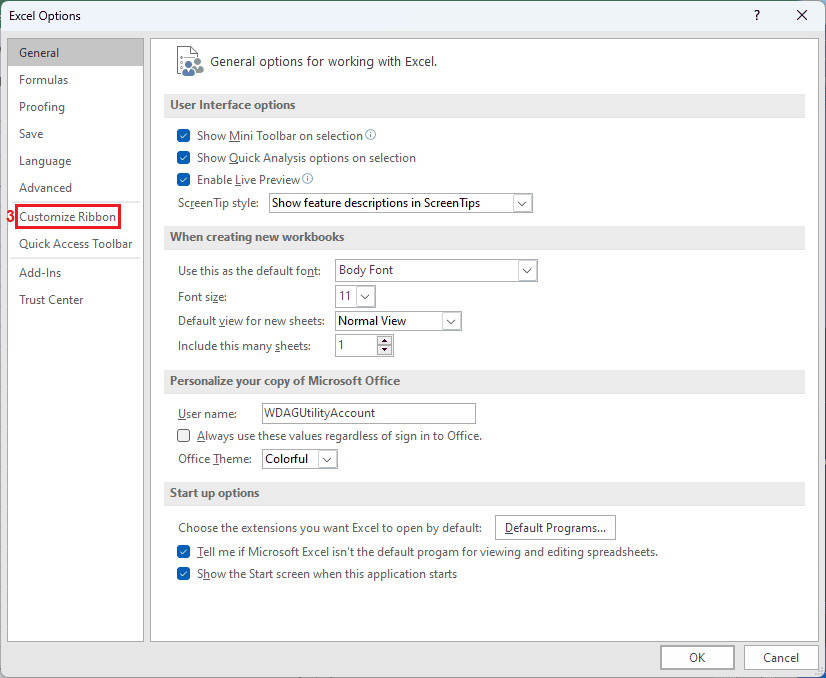

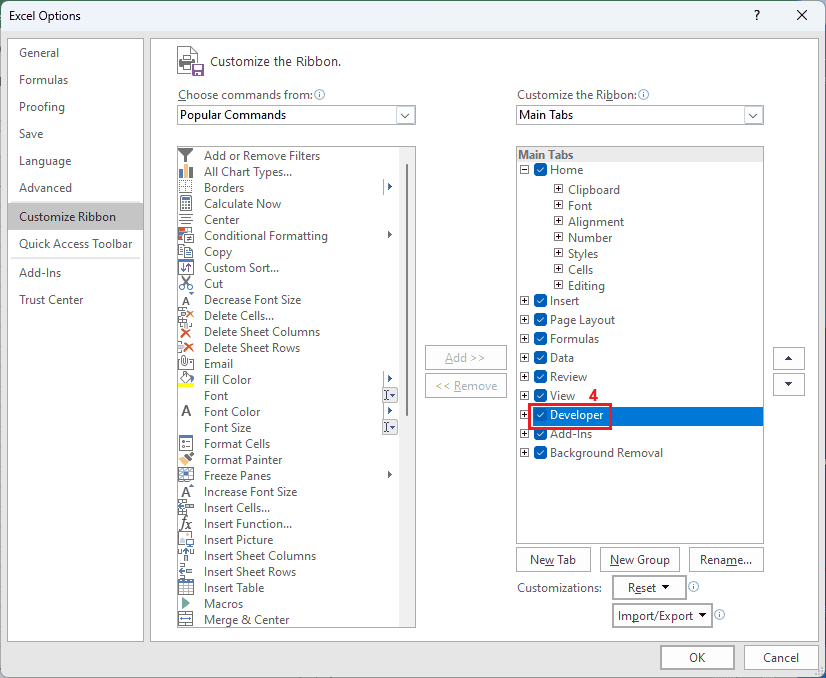

3. Click on Customize Ribbon (3) .

4. On the right-hand tab, scroll down to the check box Developer (4) and check it.

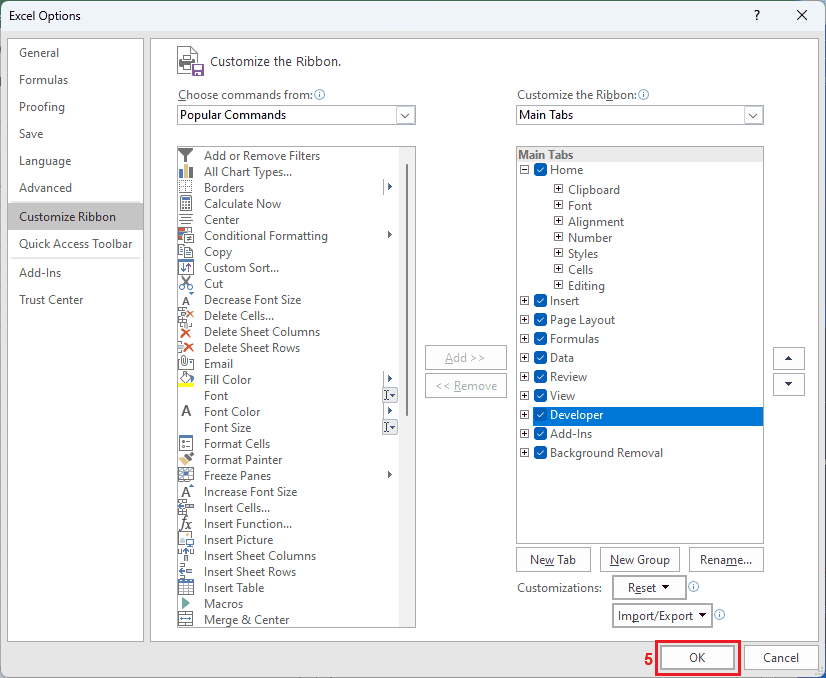

5. Click OK (5) .

A new menu will appear at the ribbon (top part of the Excel file), called “Developer”. While you can do a lot of things with the Developer menu, we’ll be using it to build our VBA web scraping script.

Once you open up the ribbon, there’ll be a Visual Basic button visible at the top left. Click on it to open to start using VBA.

Clicking on the button will open a completely new window. That’s your development environment for VBA web scraping. Like with any programming language, there’s some preparatory work for every project.

First, we’ll need to import references (libraries, packages, or modules in other programming languages) to get the necessary functions for our Excel web scraping tool.

Click on Tools and then on References . Click the checkmark on these two libraries: Microsoft HTML Object Library and Microsoft Internet Controls . Finally, click OK . Note that you can press M on your keyboard to instantly scroll down to the first reference that starts with an “M”.

Basic Concepts of VBA Web Scraping

The two references you’ve imported are the tools we’ll need to visit web pages and to scrape data from them. HTML object library allows VBA to interact with HTML files in various ways such as selecting the entire document or a part of it.

Microsoft Internet Controls, on the other hand, gives you access to Internet Explorer. While it’s certainly not the most efficient browser, you get full control over automating Internet Explorer, such as creating instances, visiting URLs, and interacting with elements.

Both of these references are vital to Excel web scraping. You wouldn’t be able to perform data extraction without them as there would be no way to visit URLs or interact with HTML.

We’ll also get two opportunities to scrape data. Our references allow us to automate Internet Explorer, which is better for more complicated websites. There’s also the XMLHTTP module (akin to Python Requests library ), which can send HTTP requests directly to websites without creating a browser instance.

How to Write Your First VBA Web Scraper

Head back to your Excel spreadsheet and create a new tab while giving a name (“Test” works fine). It should now appear in the VBA screen as “SheetX (Test)”.

Right click on your sheet, select Insert and click on Module . That should open a new window that’s akin to a programming interface in other IDEs. Now we can begin writing code.

We’ll start by creating a script that uses Internet Explorer to visit a website and download the HTML.

Sub ScrapeWebsiteUsingIE()

' Declare a variable to hold the Internet Explorer application object

Dim ie As Object

' Create an instance of Internet Explorer

Set ie = CreateObject("InternetExplorer.Application")

' Make the Internet Explorer window invisible (set to True if you want to see the browser)

ie.Visible = False

' Navigate to the specified URL

ie.navigate "http://iproyal.com"

' Wait for the browser to complete the loading of the web page

Do While ie.Busy Or ie.readyState <> 4

' DoEvents allows the operating system to process other events while waiting

DoEvents

Loop

' Declare a variable to hold the HTML document object

Dim html As Object

' Set the HTML document object to the current document in Internet Explorer

Set html = ie.document

' Declare a variable to hold the specific element we want to extract data from

Dim element As Object

' Find the HTML element by its ClassName (change text in parentheses to the actual class name of the element you want)

Set element = html.getElementsByClassName("mt-16 sm:mt-24 text-center lg:text-left tp-headline-m lg:tp-headline-xl")

' Reference the active sheet

Set ws = ThisWorkbook.Sheets("Test") ' Adjust as necessary if you want a specific sheet

' Set starting row

Dim startRow As Integer

startRow = 1

' Check if any elements were found

If element.Length > 0 Then

Dim i As Integer

For i = 0 To element.Length - 1

' Check if the element supports innerText property

On Error Resume Next

Dim elementText As String

elementText = element.Item(i).innerText

If Err.Number <> 0 Then

Err.Clear

' Try another property if innerText fails

elementText = element.Item(i).outerText

End If

On Error GoTo 0

' Output the inner text of each element if it was successfully retrieved

If elementText <> "" Then

Worksheets("Test").Cells(startRow, 1).Value = elementText

startRow = startRow + 1 ' Move to the next row

Else

ws.Cells(startRow, 1).Value = "Element does not support innerText or outerText."

startRow = startRow + 1 ' Move to the next row

End If

Next i

Else

ws.Cells(startRow, 1).Value = "No elements found with the specified class name."

End If

' Quit the Internet Explorer application

ie.Quit

' Release the Internet Explorer object

Set ie = Nothing

End Sub

While the comments are mostly self-explanatory, here’s a quick rundown of the process our VBA code for web scraping goes through:

- We create an object that holds a headless Internet Explorer instance.

- Our IE instance heads over to the specified URL and waits until loading is complete.

- We then create an object that stores the HTML file and create an element object where we’ll store specific data. We find that specific data by searching the HTML file for a specific class name.

- Since we’ll want to output the data to our worksheet, we create an integer value for a starting row. That way we can increment it later on if there’s more than one data point.

- We then create a loop if more than one element is found in order to extract all of the data in sequence.

- Anything that’s found is turned into a string. Additionally, we use both innertext (only the textual information without HTML code) and outertext (both textual and HTML code information) to look through the element.

- Finally, we check if our element is not empty. If it’s not, we output the first value found and increment our startRow integer.

- After that, we quit Internet Explorer and the code itself.

Once the web scraping script is complete, you should get the H1 heading from IPRoyal’s homepage output into your first Excel row and column.

Using XMLHTTP for HTTP Requests

Instead of using Internet Explorer to perform Excel web scraping, we can send HTTP requests directly to the website. Doing so is often much faster and efficient, however, it also gets blocked more frequently, so it’s a trade-off.

Sub ScrapeWebsiteUsingServerXMLHTTP()

' Declare a variable to hold the ServerXMLHTTP object

Dim xml As Object

Set xml = CreateObject("MSXML2.ServerXMLHTTP.6.0")

' Make an HTTP GET request to the specified URL

xml.Open "GET", "http://iproyal.com", False

xml.send

' Wait for the request to complete

Do While xml.readyState <> 4

DoEvents

Loop

' Check if the request was successful

If xml.Status = 200 Then

' Create an HTMLDocument object to parse the response

Dim html As Object

Set html = CreateObject("HTMLFile")

html.body.innerHTML = xml.responseText

' Declare a variable to hold the specific elements we want to extract data from

Dim elements As Object

Set elements = html.getElementsByClassName("mt-16 sm:mt-24 text-center lg:text-left tp-headline-m lg:tp-headline-xl")

' Set starting row

Dim startRow As Integer

startRow = 1

' Check if any elements were found

If elements.Length > 0 Then

Dim i As Integer

For i = 0 To elements.Length - 1

' Check if the element supports innerText property

On Error Resume Next

Dim elementText As String

elementText = elements.Item(i).innerText

If Err.Number <> 0 Then

Err.Clear

' Try another property if innerText fails

elementText = elements.Item(i).outerText

End If

On Error GoTo 0

' Output the inner text of each element if it was successfully retrieved

If elementText <> "" Then

Cells(startRow, 1).Value = elementText

startRow = startRow + 1 ' Move to the next row

Else

Cells(startRow, 1).Value = "Element does not support innerText or outerText."

startRow = startRow + 1 ' Move to the next row

End If

Next i

Else

Cells(startRow, 1).Value = "No elements found with the specified class name."

End If

Else

MsgBox "Failed to retrieve the web page. Status: " & xml.Status

End If

' Clean up

Set xml = Nothing

End Sub

Most of the VBA code for web scraping remains nearly identical with the exception of sending the request. Instead of creating an Internet Explorer instance, we create a ServerXMLHTTP object to send a GET request to our URL. To avoid any errors, we check for a successful response and then store the text of the HTML file into an object.

Advanced Techniques in VBA Web Scraping

Using VBA isn’t limited to just the inbuilt references. Just like with any other programming language, you can find third-party references that’ll allow you to greatly expand upon VBA web scraping capabilities.

One such popular option is integrating Selenium into your VBA web scraping project. Selenium allows you to automate more efficient and popular browsers such as Chrome and Firefox. You’ll need to download install SeleniumBasic , and find the appropriate web drivers to get Selenium running on your VBA web scraping project.

Another common issue you’ll run into is dynamic content. Luckily, VBA can handle these issues rather easily. We’ve actually used one of the functions DoEvents in our VBA web scraping scripts – using loops and DoEvents allows you to wait for pages to fully load. Include interactions that would load more dynamic content, and you’re done.

Finally, you can also parse data using VBA. In fact, it’s rather handy as it includes both web scraping and parsing features within the programming language. You can also do some parsing within the Excel file itself.

There are plenty of VBA web scraping and parsing functions you can use. Some of the popular options are getElementsByTagName, getElementById, and getElementsByClassName. Each of these lets you parse the HTML file and find specific elements. Using innerText or outerText can also provide you with more customization options when using VBA for web scraping.

Conclusion

VBA web scraping can be incredibly useful as it automatically combines web scraping, parsing, and data analysis capabilities into one singular package. While VBA web scraping isn’t as popular, it’s a good option for smaller projects or smaller teams.

Additionally, it’s fully supported and built into the Microsoft ecosystem, so there are plenty of other integrations and capabilities. So, learning VBA web scraping is definitely a good option for nearly anyone. The only drawback is that VBA web scraping requires you to learn a programming language that is less used and supported.