Web Scraping Examples: Unlock Data from Any Industry

Justas Palekas

The process of collecting data from websites using various tools and bots is called web scraping. Many companies and individuals utilize web scraping as a means to access helpful info about specific topics, industries, and audience groups.

The easy access to large amounts of information helps users shape a better business strategy and analyze the competitors. Nowadays, web scraping is widely used across many industries, such as finance, real estate, e-commerce, marketing, and more.

In this article, we’ll introduce various web scraping examples and use cases.

What Is Web Scraping?

Web scraping is the act of extracting data from websites. Scrapers usually rely on automated tools and bots to gather data efficiently, and the right tool depends on the website being targeted.

Some modern websites utilize JavaScript to load dynamic content. Therefore, a web scraper should employ the appropriate tools for this type of content, such as headless browsers. Moreover, some websites attempt to block web scraping activities, which has become one of the biggest challenges of web data extraction and a key reason advanced tools are needed.

Although most web scraping is automated these days, some people still choose to do it manually. Manual web scraping means copying and pasting data from websites by hand, so it’s time-consuming and not feasible for large-scale data scraping.

However, manual scraping usually costs less and doesn’t require much technical knowledge, so it can be a good option for small tasks. On the other hand, automated web scraping is ideal for ongoing and complex data extraction projects, as it’s very efficient and scalable.

Benefits of Web Scraping

Web scraping is one of the most practical ways to collect data in bulk. Thanks to automated collection tools, companies can access large volumes of data in a short time frame. After the data is gathered from the target website, businesses and individuals parse and store it so they can get the most out of it during the analysis phase.

There are many automatic tools and bots that not only handle web scraping tasks but also help with quickly storing and analyzing the scraped data. Of course, you can create your own tools and program them in a way that benefits you the most.

The automatic nature of most web scraping tools keeps this way of data collection very cost and time-efficient. Using web scraping software and bots, you don’t need to be involved in the process of data collection. You just request the data and choose the best tools for your specific web scraping needs.

Then, the advanced web scraping solutions will provide you with information in various formats, such as Excel files, JSON files, SQL databases, and more.
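As a rough illustration of those output formats, here is a minimal sketch that writes the same scraped records to JSON, CSV, and a SQL database using only the Python standard library. The records, field names, and the `products` table are made up for this example, and SQLite stands in for whichever database you actually use.

```python
import csv
import io
import json
import sqlite3

# Hypothetical scraped records; the field names are assumptions for illustration.
records = [
    {"name": "Widget A", "price": 19.99, "in_stock": True},
    {"name": "Widget B", "price": 24.50, "in_stock": False},
]

FIELDS = ["name", "price", "in_stock"]


def to_json(rows):
    """Serialize the records as a JSON array."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_sqlite(rows, connection):
    """Store the records in a SQLite table named 'products'."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL, in_stock INTEGER)"
    )
    connection.executemany(
        "INSERT INTO products (name, price, in_stock) VALUES (?, ?, ?)",
        # SQLite has no boolean type, so the flag is stored as 0 or 1.
        [(row["name"], row["price"], int(row["in_stock"])) for row in rows],
    )
    connection.commit()
```

Calling `to_sqlite(records, sqlite3.connect("scraped.db"))` would persist the rows to a local file, while `to_json` and `to_csv` return text that you can write wherever you need it.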

Web Scraping Examples by Industry

E-commerce

Web scraping is very popular in the field of e-commerce. Various businesses, small or big, extract data from the competitor’s websites to analyze their strategies and compare the prices. Gathering the price data helps companies offer the best price based on market standards and gain the upper hand in the competition.

For example, a company offering security solutions should always be aware of the pricing of their competitors’ services and analyze their strategies regularly.
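To make the price comparison idea concrete, the sketch below pulls prices out of a small HTML snippet with Python’s built-in `html.parser` and finds the cheapest competitor offer. The markup, the `price` class name, and the figures are all hypothetical; a real page will need its own selectors and more careful currency handling.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of every element whose class attribute includes 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        text = data.strip()
        if self._in_price and text:
            # Drop the currency symbol and thousands separators before converting.
            self.prices.append(float(text.lstrip("$").replace(",", "")))


def extract_prices(html):
    """Return all prices found in the given HTML as floats."""
    parser = PriceParser()
    parser.feed(html)
    return parser.prices


# Hypothetical competitor page fragment, used here instead of a live request.
COMPETITOR_HTML = """
<ul>
  <li><span class="name">Basic plan</span> <span class="price">$49.00</span></li>
  <li><span class="name">Pro plan</span> <span class="price">$1,199.00</span></li>
</ul>
"""

competitor_prices = extract_prices(COMPETITOR_HTML)
cheapest = min(competitor_prices)
```

In practice the HTML would come from an HTTP response rather than a constant, and the extracted prices would be stored over time so that changes can be tracked.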

However, web scraping in the e-commerce industry is not limited to competitive goals. Many businesses attempt to gather information about their products and services. Scraping data related to customer reviews and ratings of a specific product or service helps businesses achieve a general understanding of their product appeal to different audience groups.

Moreover, web scraping can be useful for checking product availability across websites and retailers and for tracking product inventory. Amazon is often cited as an example of a huge e-commerce company that monitors products and trends in multiple markets this way.

Real Estate

Web scraping is highly beneficial for real estate agencies and platforms. They gather property listings from real estate websites to create or update a database with a wide range of properties, which lets customers find suitable options based on their criteria.

It’s also useful for market trend analysis as real estate agents and investors can find the changes in the value of certain properties in specific areas and build their strategy around them.

As in many other industries, scraped data can generate leads for agents by providing information about people who are searching for properties or have a property to sell. Rental price comparison is another use case: scraped data helps landlords and property managers compare prices in various areas and offer competitive rates.

Investors and renters can use the same method to find the best property in a specific area. Many investors gather historical property data to recognize long-term trends and make informed investment decisions.

Digital Marketing and SEO

There are many examples of web scraping in the digital marketing and SEO fields. Many SEO specialists scrape data to find the most popular keywords for their content. They also take advantage of it for backlink extraction from the competitors’ websites to identify beneficial domains that help search engine optimization.

In addition to that, most website owners take advantage of web data scraping for content performance tracking. Scraping search engine result pages (SERPs) can lead to a better understanding of rankings for specific keywords.

Scraping engagement data from viewers and customers can guide website owners in finding the best topics for their content to resonate with their target audience. The engagement data includes likes, comments, or shares on articles or blog posts.

Using the same approach on social media platforms is very common, too. Business owners can scrape platforms such as Instagram, X, and Facebook to track the social media activity of their customers and understand the general sentiment towards their brand and products.

Finance and Investment

Financial markets are among the most profitable use cases for web scraping. For example, scraping data from the stock market with the intention of analyzing trading volumes, market indices, market sentiment, and historical stock prices is a common act in financial firms. Investors and analysts also use web scraper bots to gather financial news to stay informed and identify emerging trends.

Moreover, due to the high volatility of cryptocurrency markets, web scraping is extensively used to monitor prices and make quick decisions. To get a general grasp of overall economic trends, scraping economic indicators such as GDP and unemployment rates is very common too.

In addition to that, some investors scrape Securities and Exchange Commission (SEC) filings and reports to analyze various companies’ financial performance.

Academic Research

Not all web scraping is done for profit. Nowadays, data collection for academic research can be done on a larger scale and more quickly than ever before. Researchers usually scrape web data from multiple sources, such as online databases and academic journals, to support their conclusions.

One of the common web scraping examples of this is gathering data for public health studies. In this case, researchers usually target forums and health-related websites to collect behavioral data on smoking, diet, exercise habits, and more.

Other web scraping examples in this field include collecting articles from academic databases for literature reviews and analyzing scientific publications to identify emerging trends and popular research topics. Data scraping is also used for public sentiment analysis on many topics, which helps researchers understand public opinion and study social dilemmas.

Travel and Hospitality

Web scraping is highly effective for many tasks related to the travel and hospitality industry. One of the most common examples in this industry is gathering data on hotel and flight prices to find the best deals.

Many travel agencies collect event and festival data alongside hotel and flight prices to offer more comprehensive travel packages. On top of that, they can collect weather data from related websites to plan their campaigns based on weather predictions.

In other cases, travel businesses collect and monitor travel reviews to understand the sentiment around various services and to compare their own reviews with those of competitors. Overall, competition plays a huge role in this industry, and web scraping can be very beneficial for analyzing competitors’ pricing structures in many areas, such as travel packages, hotels, car rentals, flights, and more.

Media and Entertainment

The media and entertainment industry is constantly evolving, so web scraping is essential to track trends in content consumption. Many movie and TV show platforms such as Rotten Tomatoes, IMDb, and Metacritic offer huge amounts of data related to critic and audience ratings and reviews. Web scraping of this kind of data can be quite valuable for production companies and streaming services.

Speaking of streaming services, nowadays many streaming platforms such as Hulu and Netflix are competing with each other over the consumers’ attention. These companies regularly scrape data from their competitors’ websites and platforms to analyze their performance and create a content strategy based on what is available on the other streaming services.

Moreover, web scraping social media websites makes it easier for companies to analyze buzz for new releases and measure the success of marketing campaigns.

Advanced Web Scraping Techniques

Users need advanced techniques to keep the web scraping process free of hiccups such as IP blocks and cluttered data. Many web scrapers use APIs to reduce the complexity of the extraction process and obtain well-organized data. An API (Application Programming Interface) is an interface that allows software components to communicate with each other, and a web scraping API returns data in a structured format that is easier to parse and analyze than raw HTML.
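To show why structured API output is easier to work with, here is a minimal sketch that turns a JSON response body into typed Python records. The response shape, the `results` key, and the `Listing` fields are invented for this example; any real API defines its own schema.

```python
import json
from dataclasses import dataclass


@dataclass
class Listing:
    title: str
    price: float
    currency: str


# Hypothetical response body; real APIs define their own field names.
API_RESPONSE = """
{
  "results": [
    {"title": "Two-bedroom flat", "price": 1450, "currency": "EUR"},
    {"title": "Studio apartment", "price": 890.5, "currency": "EUR"}
  ]
}
"""


def parse_listings(body):
    """Convert a JSON response body into a list of Listing records."""
    payload = json.loads(body)
    return [
        Listing(item["title"], float(item["price"]), item["currency"])
        for item in payload["results"]
    ]


listings = parse_listings(API_RESPONSE)
```

Because the fields arrive already labeled, there is no need to locate them inside markup, which is where most of the fragility in HTML scraping comes from.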

Many websites employ advanced anti-scraping measures, including CAPTCHAs and IP blocks, to stop automated bots from accessing their content and overwhelming their servers. Users can take advantage of CAPTCHA-solving services to get past these puzzles. The easiest way to prevent IP blocks is to use high-quality residential proxies with rotating IPs, which make it seem like the requests are coming from real users.
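The rotation part of that idea can be sketched in a few lines. The example below cycles through a list of proxies in round-robin order and builds a `urllib` opener for each request. The proxy addresses are placeholders from a documentation-only IP range, and the `ProxyRotator` class is a hypothetical helper, not part of any proxy provider’s SDK. Many providers handle rotation on their side behind a single endpoint, in which case none of this is needed.

```python
import itertools
import urllib.request

# Placeholder proxy addresses; replace them with your provider's endpoints.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hand out proxies in round-robin order, one per request."""

    def __init__(self, proxies):
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        """Return the next proxy URL in the rotation."""
        return next(self._cycle)

    def build_opener(self):
        """Return a urllib opener that routes HTTP and HTTPS traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES)
```

Each call to `rotator.build_opener()` returns an opener bound to the next proxy, so a request such as `rotator.build_opener().open(url, timeout=10)` goes out through a different address every time.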

Handling dynamic content from various modern websites that utilize JavaScript is another challenge of web scraping. These websites don’t offer full data on the initial HTML page. Hence, web scrapers often use browser automation tools such as Puppeteer and Selenium, which can execute JavaScript and provide dynamic content for scraping.

Legal and Ethical Considerations

Scraping publicly available data is generally legal, but some websites prohibit it. So before you start, you should check the Terms of Service of the target website and find out whether its content is protected by copyright. Violating these terms can lead to legal problems.

Moreover, there are some data protection laws in various regions you need to be aware of. For example, the General Data Protection Regulation (GDPR) governs the handling of personal data in the EU.

To minimize possible legal and ethical issues, we recommend these practices:

- Comply with the Terms of Service (ToS) of websites

- Avoid overloading servers using rate limiting on scrapers

- Only extract publicly available data and respect users’ privacy

- Don’t use the collected data for spamming or any other malicious purposes

- Avoid using malicious bots
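The rate limiting practice above can be implemented in several ways. One minimal sketch is a helper that enforces a minimum delay between consecutive requests. The `RateLimiter` class and its parameters are hypothetical names for this example, and the right interval depends on the target site.

```python
import time


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        # clock and sleep are injectable so the limiter can be tested without waiting.
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Block until at least min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request = now
```

A scraper would create one limiter per target site, for example `limiter = RateLimiter(2.0)`, and call `limiter.wait()` immediately before each request.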

If you pay attention to legal battles from the past, you can learn a lot about web scraping and its regulations. For instance, in the legal battle between LinkedIn and hiQ Labs, the U.S. Court of Appeals for the Ninth Circuit held that scraping publicly available data from LinkedIn’s website likely did not violate the Computer Fraud and Abuse Act (CFAA). The dispute did not end entirely in hiQ’s favor, however: a district court later found that hiQ had breached LinkedIn’s user agreement, and the parties subsequently settled.

Another example is the case between Facebook and Power Ventures, which ended in favor of Facebook because Power Ventures continued to scrape the site after receiving a cease-and-desist letter. The court considered this unauthorized access in violation of the CFAA, a U.S. federal law.

Best Web Scraping Tools

These days, you can find many web scraping tools for different use cases that make data collection easy and scalable. Here are four of the best web scraping tools available:

Scrapy: Best for Developers

Scrapy is an open-source Python framework for large-scale web scraping. It is a highly customizable and flexible tool that can handle complex websites and, when paired with a headless browser integration, dynamic content. Furthermore, it’s one of the fastest web scraping tools available. Due to its customizable nature, Scrapy is better suited to developers and technical users who can handle its steep learning curve.

Although it’s available for free, Scrapy provides a robust toolset for managing sessions and processing data that comes in handy when it’s time to parse and analyze the data. Users can export the data in various file formats such as CSV, JSON, and XML.

Pros

- Great customization options

- Can handle complex and large-scale scraping

- Fast and efficient output

- Free and open-source

Cons

- Setup and configuration can be hard for beginners

- Requires programming knowledge

Octoparse: Best for Non-Programmers

Octoparse allows users to extract data from websites and create scrapers without the need for any coding. It’s a web scraping platform that relies on a visual interface to offer user-friendly features for beginners without coding skills. Using built-in CAPTCHA-solving capabilities, proxy rotation, and IP masking, you can bypass many obstacles placed by protected websites.

Octoparse offers cloud storage for large-scale scraping and can handle dynamic content from JavaScript-based websites. Moreover, Octoparse has AI features such as Auto Detect that make the web scraping experience faster and smoother. Octoparse users can export the data as CSV or Excel files or send it to databases.

Pros

- User-friendly interface perfect for non-coders and beginners

- Cloud storage

- Can handle dynamic content

Cons

- Limited customization options

- The pricing for advanced features can be high

Beautiful Soup: Best for Data Parsing

Beautiful Soup is mainly an HTML parser that is ideal for cleaning and navigating complex HTML structures. As a Python library, it creates a parse tree for documents and makes it easy to extract and analyze data. Users can easily integrate Beautiful Soup with other Python libraries.

The main features of Beautiful Soup include the ability to parse HTML and XML documents, navigate and modify the parse tree, and handle poorly structured or broken HTML files.

Pros

- Free and open-source

- Easily integrates with other tools

- Large community and extensive documentation

Cons

- Requires coding knowledge

- No cloud features (only local)

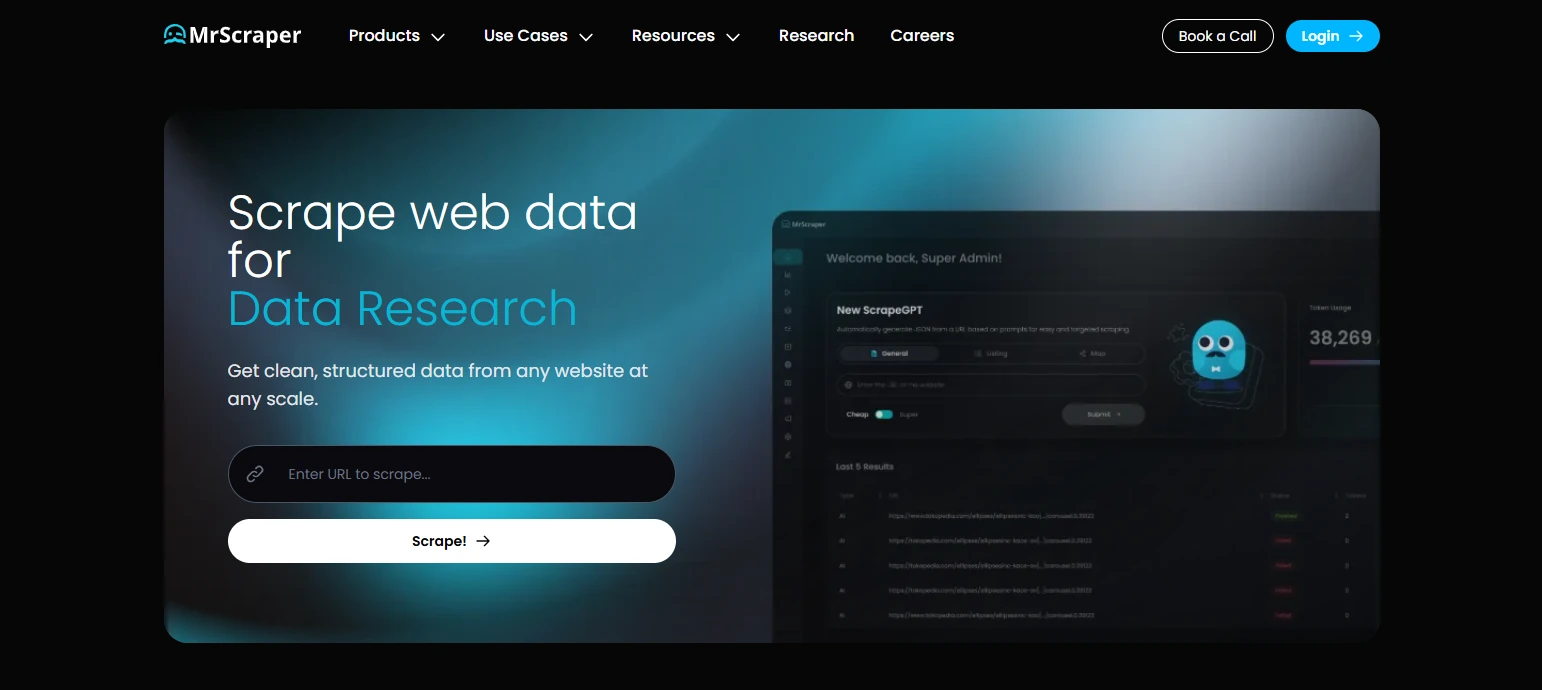

MrScraper: Best for Visual, AI-assisted Scraping

MrScraper is a web scraping platform that helps businesses collect structured data from websites without building and maintaining complex scraping infrastructure. It supports large-scale data extraction with built-in proxy management, anti-blocking measures, and API access, making it suitable for use cases such as market research, lead generation, price monitoring, and competitive analysis.

What makes MrScraper stand out is its balance between ease of use and scalability. Teams can request custom scraping solutions or integrate directly via API to automate recurring data collection workflows. This makes MrScraper a practical option for companies that need reliable data delivery without having to manage scrapers, proxies, and browser automation themselves.

Pros

- Built-in proxy rotation and anti-blocking handling

- API access for automation and recurring data delivery

- Suitable for large-scale and long-term scraping projects

Cons

- Less hands-on control compared to fully custom-built scrapers

- Pricing may be higher than DIY solutions for one-off tasks

Is it Legal to Web Scrape?

Yes, web scraping itself is generally legal. However, users should be aware that scraping websites that explicitly prohibit it can lead to legal issues. Moreover, using malicious bots and scraping private data can violate laws in some regions. If you avoid these issues, you can legally web scrape and be among the many companies and individuals who benefit from efficiently collecting data.