Automated Web Scraping in Any Language

Tutorials

Justas Vitaitis

Automated web scraping is a business superpower. It allows you to extract insights from vast amounts of data in a fraction of the time.

All businesses need information to make decisions. Manual data collection is expensive and prone to errors. For this reason, collecting data automatically is vital to stay on top of the market trends.

You can monitor competitor prices, supplier prices, news outlets, product releases, product reviews, social media mentions, headhunt talent and more. Web scraping is a perfect tool to do all of this.

But it can do more.

You can get all this data and use it to create pages automatically to rank on Google.

This post is actually the second part of our four-part series on building a programmatic SEO site. In it, you will learn automated web scraping. But instead of just learning it, you can use it to build a programmatic site as well.

Today, you’ll learn how to scrape data from any site using any programming language. Additionally you’ll learn how to set up your backend so it runs automatically, getting data, dealing with errors, and publishing new content.

If you are curious, here are the four main topics we are covering in this series:

- A programmatic SEO blueprint

How to pick the right programming language and libraries depending on your target sites, how to set up the backend for automated web scraping, and the best tools for web scraping. - How to perform automated web scraping in any language

How to pick the right programming language and libraries depending on your target sites, how to set up the backend for automated web scraping, and the best tools for web scraping. - How to build a Facebook scraper

Here, we dive into how to collect data from our target site, how to collect data from other sites (such as Amazon), how to process data, and how to deal with errors. - Easy programmatic SEO in WordPress

In the last part of the series, you will learn how to use your scraped data to build a WordPress site.

Let’s get started!

What Is Automated Web Scraping?

Automated web scraping is the process of extracting data from websites on an automated schedule or based on triggers. Therefore, you can use it to pull data from websites automatically, when a specific action happens (for example, a new post) or on specific dates and times of the day.

Web scraping , by itself, is the process of loading data from sites, reading them like a human would do, but using code to do so. Automated web scraping is using this tool, taking it a step further by starting the scraping process automatically as well.

The Programmatic SEO Series

To quickly recap, in this four-part series, you are building a programmatic site to rank high on Google. In the first part on programmatic SEO, you saw how to plan your site and how to find good keywords to rank for. There, we’ve found a great keyword cluster for Facebook marketplaces in specific cities, with the potential for millions of searches.

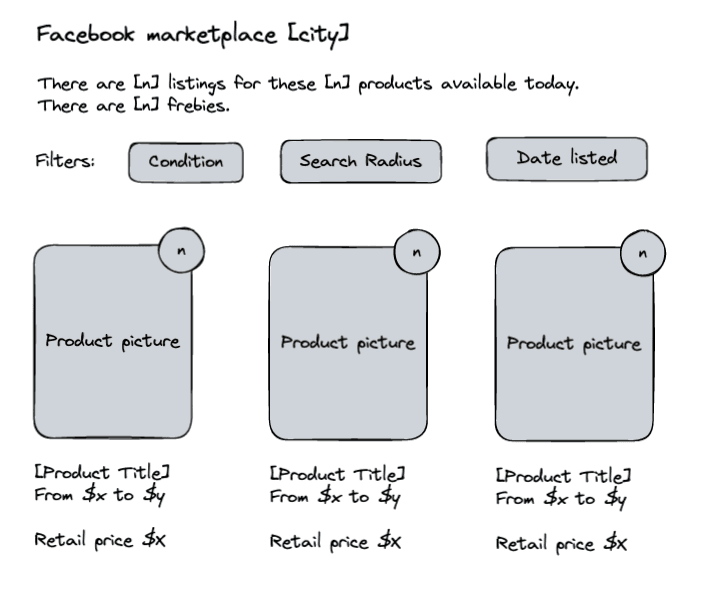

Therefore, we are building a site that allows users to see a list of pre-filtered products that are available in the marketplace for their city. So instead of seeing a plethora of posts, they’ll see specific products that are available in their city, similar to a regular e-commerce site. It will look like this:

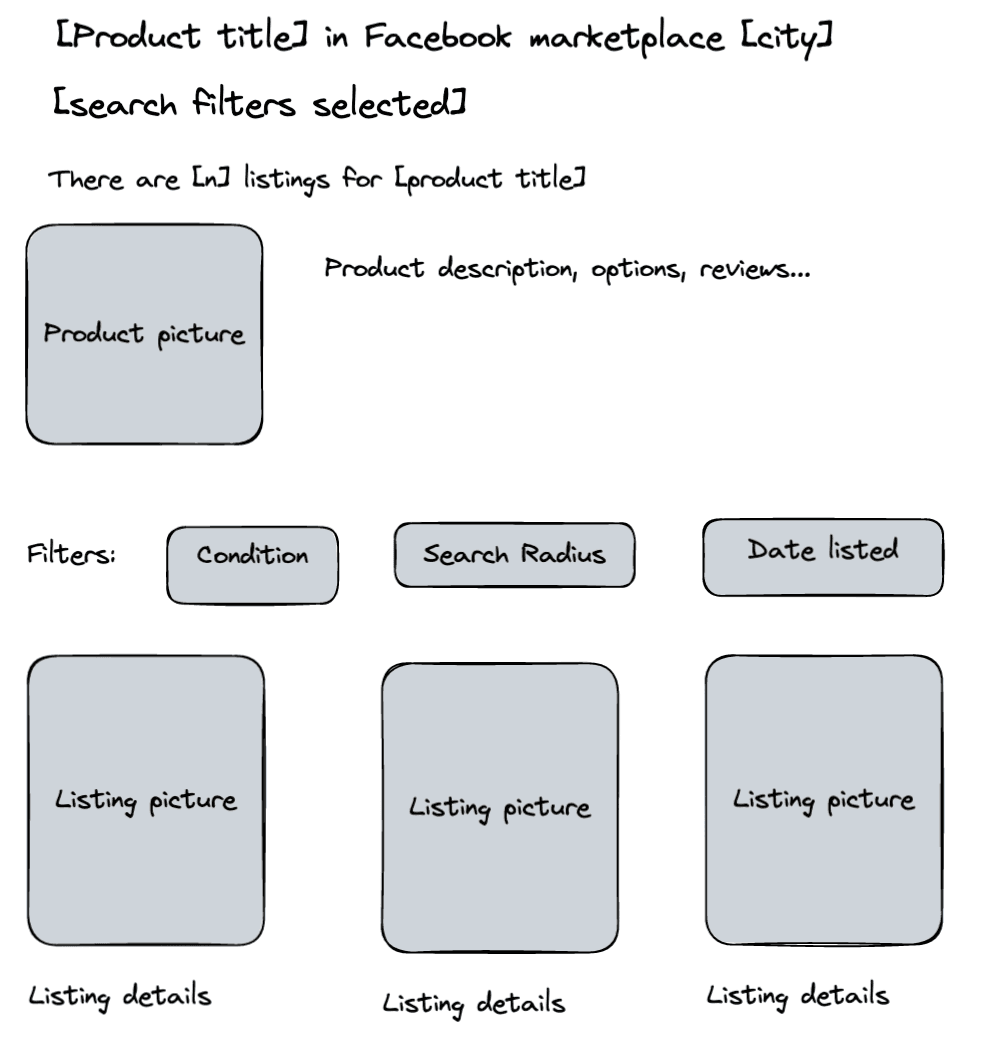

And when they click on one of these pictures, they see details about the product along with listings that include it:

Now we need to define what kind of backend we will use to implement this site, so that we can gather data from Facebook marketplaces and Amazon.

What Are the Risks of Web Scraping?

Web scraping brings some risks for those who are performing it. You can get blocked from your target sites, you can get sued for copyright claims, and Google can punish your site if you are using spam content. But all these risks can be mitigated.

You can avoid getting blocked by using a service such as IPRoyal’s Residential proxies . With it, you use a different IP address for each request. Therefore, website owners won’t know you are loading more than one page. From their point of view, these are two different visitors from completely different places in the world.

In addition, there are other methods to avoid getting blocked, and we dive deeper into it when we are actually scraping your target’s data. In case you are curious, here are some actions you can take:

- Use a headless browser

This ensures that requests include metadata that bot requests usually don’t include. - Add random intervals between requests

This makes your requests imprecise, mimicking human behavior. - Remove query arguments

Some URL parameters can be used to identify a user, make sure you remove them.

There’s a simple solution to copyright claims: don’t publish copies of other peoples’ intellectual property. Don’t use their images and don’t use their text blocks unless you have their consent. You can collect data points and use these texts in your analysis. But don’t just clone their content on your site. As long as you don’t do that, you are all set. Web scraping is 100% legal , and they have no grounds to sue you for that.

And finally, there’s the platform risk. Google sometimes punishes programmatic websites for spam content. And this one has a simple solution as well: don’t build a spam website. As long as your site has valuable content to visitors, it doesn’t matter if your site is programmatic or not.

How to Automate Web Scraping?

You can automate web scraping with four simple components: A trigger, a target database, a scraping library, and a results storage. Let’s dive into each of these components, so you can plan your automated web scraping efforts.

Automated Web Scraping Triggers

The triggers start the web scraping process. They can be a piece of code that runs on specific conditions or even a no-code tool that runs the web scraping scripts for you. You can set up three types of web scraping triggers:

- Manual triggers

- Automated triggers

- Schedules

When you run your web scraper manually, that’s a manual trigger. It can be something as simple as executing the code, or it can be more complex, such as building a webhook that allows you to run it remotely. For example, this is a manual trigger:

node scraper.js

And you can build a manual trigger that runs when you visit a URL:

mysite.com/webscrape/?mykey=PASSWORD&type=SCRAPINGOPTIONS

This URL would run your web scraper using the options you’ve set in it.

Regarding automated triggers, you can run your web scraper when specific actions happen. For example, you might want to run your scraper to get new leads when you close a project in your CRM.

In terms of implementation, it depends on what you are doing and when it happens, but it could be a terminal command as well as a call to a webhook or API , just like the previous example.

Schedules are the most popular triggers for automated web scrapers. You can use server tools such as cron to run your web scraper on specific dates and times. Or you could schedule them on your computer using apps. These tools work by connecting with the system clock, so they are very reliable.

Nonetheless, it’s important to implement monitoring tools along with your triggers to ensure that any firing errors or execution errors are closely monitored.

For your programmatic website, you are going to use the cron tool. With it, we are updating listings for the Facebook marketplace offers every day, as well as the retail prices for products on Amazon.

How to Save Your Automated Web Scraping Rules

Now you need to store a database of your web scraping rules, URLs, profiles, and other options you may want to store. This database can be as complex as you want it to be. You can add multiple options or just a list of URLs and selectors.

In our example, we have two main data points to store, the products we want to scrape from Amazon and the Facebook marketplaces we want to scrape (which cities).

The products table contains the following:

- ID

- Product name

- URL

- Details

- PictureURL

- Review stars

- ScrapingRule

The Facebook Marketplace table contains the following:

- ID

- City name

- City coordinates

- URL

- ScrapingRule

To improve performance a little bit, let’s store the Facebook marketplace listings on a separate table:

- ID

- City_ID

- Product_ID

- URL

- Price

- Coordinates

- AuthorName

- PictureURL

- ScrapingRule

These tables can be as big as you want, and you can add many more data points if you want. But that should be a good starting point. You can use any database you want to store this data, even Excel or Google Sheets. In our example, we are building the tables along with the web scraper itself in the next article of this series.

How Do I Scrape a Dynamically Loaded Website?

The best way to scrape a dynamically loaded website is by using a headless browser . With it, you can control a real browser using code, mimicking user actions. This allows you to do anything a real user would do, from reading content to taking screenshots, filling in forms, and even executing JS code. They can load any type of page, as opposed to other libraries that can only load simple static sites.

When it comes to picking the best programming language or library, there are many points to consider.

A good way to think about this is to focus on libraries that don’t depend as much on a specific language. This might sound weird, but a lot of libraries are present in multiple languages, such as Playwright.

If you learn how to use Playwright, you can use the same base syntax, no matter which programming language you pick. It’s like learning English. If you move to the UK or Australia, you’ll get by just fine. You just need to learn a few slang phrases and a few idioms.

A library like Playwright is incredibly flexible, and if you ever need to move to a different programming language, you can easily port your code to it.

For example, this is how you create a basic scraper with Playwright in Java:

public static void main(String[] args) {

try (Playwright playwright = Playwright.create()) {

BrowserType.LaunchOptions launchOptions = new BrowserType.LaunchOptions();

try (Browser browser = playwright.chromium().launch(launchOptions)) {

BrowserContext context = browser.newContext();

Page page = context.newPage();

page.navigate("https://ipv4.icanhazip.com/");

page.screenshot(new Page.ScreenshotOptions().setPath(Paths.get("screenshot-" + playwright.chromium().name() + ".png")));

context.close();

browser.close();

}

}

}

}

And this is how you do it in JS:

import { test } from '@playwright/test';

test('Page Screenshot', async ({ page }) => {

await page.goto('https://ipv4.icanhazip.com/’);

await page.screenshot({ path: `example.png` });

});

Notice that the languages are quite different, but the general methods are the same. You create a new browser, use the goto method, and use the screenshot method. You just need to learn these once, and you can use them in any programming language you want.

Therefore, we will implement our programmatic site using Playwright, as it allows you to scrape complex sites like Facebook and Amazon using residential proxies to avoid getting blocked and using login credentials to read the marketplace data.

We will dive into the specifics of how to extract data from your target site in the next post of this series.

How to Process Automated Web Scraping Content?

Now you have collected data from your target, you need to process it. This is the point where you run your analysis, generate reports, and make decisions. In our demo, this is when you get scraped data and push it to your databases so that they are used in your programmatic site.

There are many ways to implement this. You can use Excel or Google sheets and a WordPress plugin to import data. You can also write data to your database directly, or use the WP rest API to store this data.

We are going to dive deeper into how to create a programmatic site with WordPress in the last step of our series.

Conclusion

Today you learned how you could plan your backend for automated web scraping. You’ve learned how you can use a library such as Playwright to scrape data from any site in any programming language.

We hope you’ve enjoyed it, and see you again next time!

FAQ

Is web scraping better than API?

Web scraping is better than APIs in the sense that you aren’t limited to the data that the API provides you. In addition, you can collect data from any site you want using web scraping, APIs are limited to a specific site.

On the other hand, web scraping is usually more complex, and you need to take into account other factors, such as getting blocked.

How to do automated web scraping in Python?

You can perform automated web scraping with Python by using scripts and spiders. You can use a library such as Playwright to help you, or even use other libraries to help you. The overall process consists of a trigger (an action to start the scraping job - such as a cron schedule), a target database (containing URLs and scraping rules), a scraping library, and a results database.

How do you automate screen scraping?

You can automate screen scraping with a code library such as Playwright. You can use a trigger (manual or schedule), a database with URLs and screen scraping rules, the code library (such as Playwright) and a way to organize the screen scraping results, such as a script to organize files and folders.