How to Download Images With Python?

Vilius Dumcius


The Requests library is a common tool for downloading the HTML code of web pages when doing web scraping. But did you know you can also use it to download and scrape other types of information, such as pictures?

This article will show you how to download an image using Python. It will cover three libraries: Requests, urllib3, and wget. It will also show how to use proxies to keep your IP hidden while scraping images.

Downloading Images With Requests

A convenient tool for downloading images while scraping is one you’re most likely using already: Requests. It’s a powerful and widely used Python library for making HTTP requests and handling responses.

Requests is mostly used to fetch web pages and API responses, and it has no dedicated function for saving images. It doesn’t need one, though: the response body is available as raw bytes, and Python’s built-in file handling can write them to disk.

All you need to do to download an image with Requests is:

- Request the file using Requests.

- Write the contents of the response into an image file.

Let’s say you want to download a book cover from Books to Scrape, a web scraping sandbox. Here’s how you can do it.

First, import the library.

import requests

Then, make a variable holding the URL of the image you want to download.

url = 'https://books.toscrape.com/media/cache/2c/da/2cdad67c44b002e7ead0cc35693c0e8b.jpg'

Request the image using requests.get().

res = requests.get(url)

Finally, write it into a file.

with open('img.jpg', 'wb') as f:
    f.write(res.content)

You do need to be careful not to use the wrong extension with this method, though. For example, saving PNG data under a .jpg name produces a file whose extension doesn’t match its contents, which some programs will refuse to open.

Here’s how you can solve this. If you’re getting the URLs from a page, you can extract the file name and/or extension from the URL and use that as the file name for the downloaded file.

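def extract_name(url):
    file_name = url.split("/")[-1]
    return file_name

with open(extract_name(url), 'wb') as f:
    f.write(res.content)

As long as the URL ends with the image’s file name, this makes the saved file’s extension match the one the server uses, which normally reflects the actual image format.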
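Splitting on "/" works for the Books to Scrape URL, but it keeps any query string or fragment (for example, cover.png?size=large) in the file name, and it returns an empty name for URLs that end with a slash. A slightly more defensive sketch, using only the standard library, parses the URL first and falls back to a default name. The helper name file_name_from_url and the "image" fallback are hypothetical choices, not part of any library.

```python
import posixpath
from urllib.parse import unquote, urlsplit


def file_name_from_url(url: str, default: str = "image") -> str:
    """Return the file name from the last segment of a URL's path."""
    # urlsplit() separates the path from any "?query" or "#fragment" part
    path = unquote(urlsplit(url).path)  # decode "%20" and similar escapes first
    name = posixpath.basename(path)     # keep only the text after the final "/"
    # Fall back when the URL has no usable file name (e.g. it ends with "/")
    if name in ("", ".", ".."):
        return default
    return name
```

You could use it in place of extract_name(), for example open(file_name_from_url(url), 'wb'). It does not guarantee a valid name on every operating system, so treat it as a starting point.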

In addition to downloading images, Requests offers plenty of other functionality. To learn more about the library, you can read our full Python Requests library guide.
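When you download larger files or many images in a row, a common Requests pattern is to reuse a Session, set a timeout, stop on HTTP errors, and stream the body to disk in chunks instead of holding it all in memory. The sketch below assumes you pass in a requests.Session() (or any object with the same get() interface); the function name save_image and the 8 KB chunk size are illustrative choices, not requirements.

```python
from pathlib import Path


def save_image(session, url: str, dest: Path, chunk_size: int = 8192) -> Path:
    """Stream an image to `dest` using a requests.Session (or a compatible object)."""
    # stream=True defers downloading the body until we iterate over it
    with session.get(url, stream=True, timeout=10) as response:
        # Raises requests.HTTPError on 4xx/5xx, so error pages aren't saved as images
        response.raise_for_status()
        with open(dest, "wb") as f:
            for chunk in response.iter_content(chunk_size=chunk_size):
                f.write(chunk)
    return dest
```

Usage might look like: with requests.Session() as session: save_image(session, url, Path("cover.jpg")). Because the HTTP status is checked before the file is opened, a failed request leaves no half-written file behind.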

Using Proxies With Requests

Nobody will bat an eye if you download an image or two with a script. But once you decide to download, for example, the whole collection of book covers instead of just one, you need to be careful. Website administrators are usually not big fans of large-scale scraping activity, especially when it involves loading sizable elements such as images.

To avoid detection, it’s essential to use a proxy. Proxies act as a gateway between you and the website you’re accessing, enabling you to mask your IP address.

Adding a proxy to a Requests request is simple. All you need is a proxy provider. The example below will use IPRoyal residential proxies. This service provides you with a new IP for every request you make, making it quick to set up and safe to use.

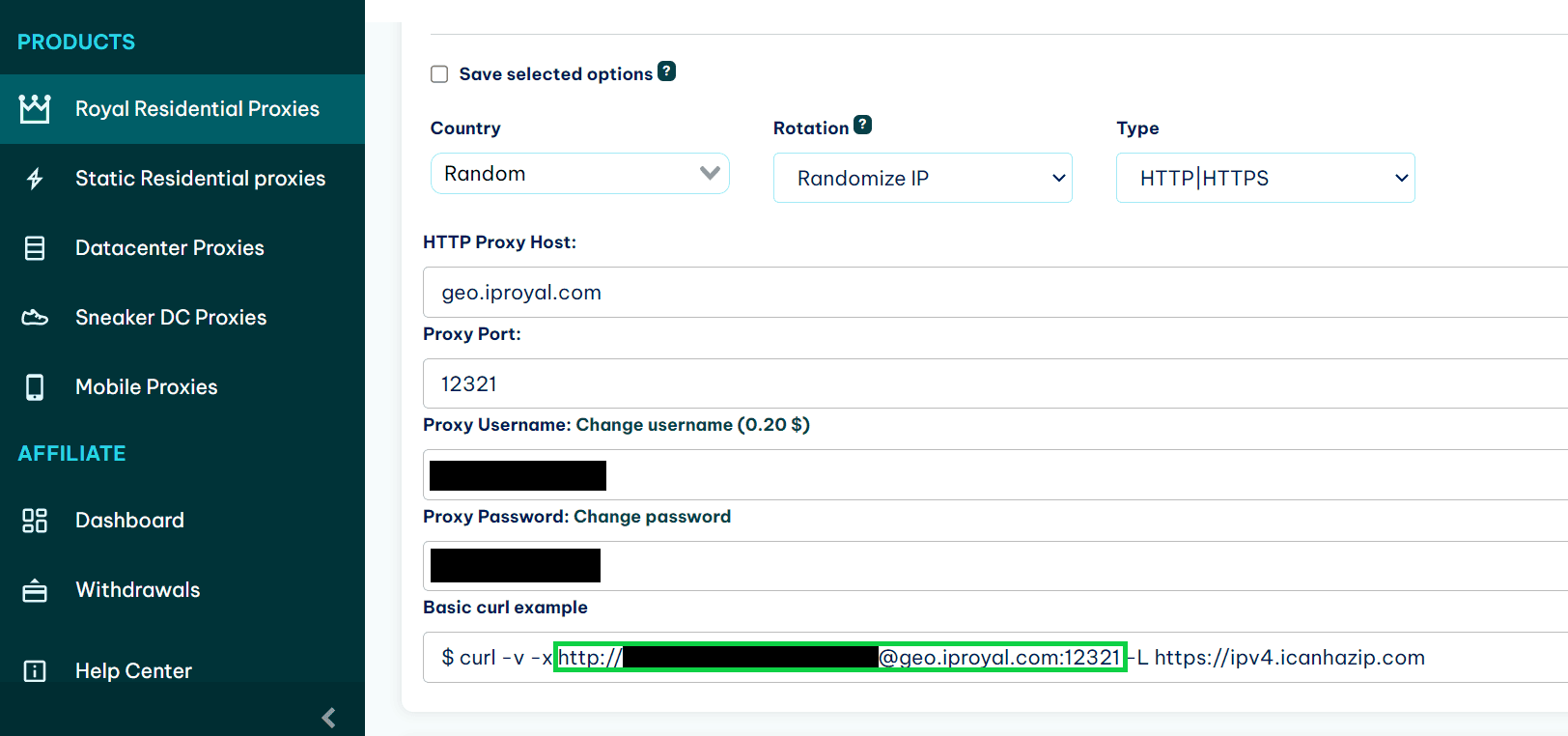

First, you need to get the link to your proxy. If you’re using IPRoyal, you can find it in the dashboard under Residential proxies.

Afterward, create a dictionary in your script to hold the proxy links.

proxies = {
    'http': 'http://link-to-proxy.com',
    'https': 'http://link-to-proxy.com'
}

Then you can provide the dictionary to the requests.get() function via the proxies parameter.

res = requests.get(
    url,
    proxies=proxies
)

Now your requests go through the proxy, which makes it much harder for the website’s owners to trace the downloads back to your IP address.
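Some providers give you a username and password rather than a ready-made link. Requests accepts credentials embedded in the proxy URL (http://username:password@host:port) and decodes percent-encoded credentials before sending them, so a password containing characters such as @ or : should be percent-encoded first. A minimal sketch with placeholder values; the helper build_proxies and the host and port are hypothetical.

```python
from urllib.parse import quote


def build_proxies(username: str, password: str, host: str, port: int) -> dict:
    """Build a Requests-style proxies dict for an authenticated HTTP proxy."""
    # safe="" makes quote() escape every reserved character, including "/"
    user = quote(username, safe="")
    pwd = quote(password, safe="")
    proxy_url = f"http://{user}:{pwd}@{host}:{port}"
    # The same HTTP proxy URL is used for both plain HTTP and HTTPS traffic
    return {"http": proxy_url, "https": proxy_url}


proxies = build_proxies("username", "p@ss:word", "proxy-host.example", 12321)
```

The resulting dictionary is passed to requests.get() through the proxies parameter, exactly like the hand-written one.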

If you got lost at any point, here’s the full code for downloading an image from a website using a proxy:

import requests

def extract_name(url):
    file_name = url.split("/")[-1]
    return file_name

proxies = {
    'http': 'http://link-to-proxy',
    'https': 'http://link-to-proxy'
}

url = 'https://books.toscrape.com/media/cache/2c/da/2cdad67c44b002e7ead0cc35693c0e8b.jpg'

res = requests.get(
    url,
    proxies=proxies
)

with open(extract_name(url), 'wb') as f:
    f.write(res.content)

Downloading Images With Urllib3

Urllib3 is an HTTP client library for Python, much like Requests. In fact, Requests uses urllib3 under the hood. Both libraries are very similar, so the choice usually comes down to whichever one is already part of your workflow.

If you use urllib3 instead of Requests, here’s how you can download images with it.

First, import the library:

import urllib3

Then, create a variable with the URL of the image you want to scrape.

url = 'http://books.toscrape.com/media/cache/2c/da/2cdad67c44b002e7ead0cc35693c0e8b.jpg'

After that, download the image:

res = urllib3.request('GET', url)

This top-level urllib3.request() function is available in urllib3 2.0 and newer. Now you can extract the name of the file from the URL and save it into a file.

def extract_name(url):
    file_name = url.split("/")[-1]
    return file_name

with open(extract_name(url), 'wb') as f:
    f.write(res.data)

Here’s the full code for convenience:

import urllib3

url = 'http://books.toscrape.com/media/cache/2c/da/2cdad67c44b002e7ead0cc35693c0e8b.jpg'

res = urllib3.request('GET', url)

def extract_name(url):
    file_name = url.split("/")[-1]
    return file_name

with open(extract_name(url), 'wb') as f:
    f.write(res.data)

Using Proxies With Urllib3

As with Requests, downloading images with urllib3 at scale calls for proxies to hide your activity from the website owners.

Unfortunately, using proxies with urllib3 is a bit more complicated than using them with Requests. This is especially true when you want to use authenticated proxies: proxies that require you to supply a username and password to access them.

To use authenticated proxies with urllib3, you’ll need three things:

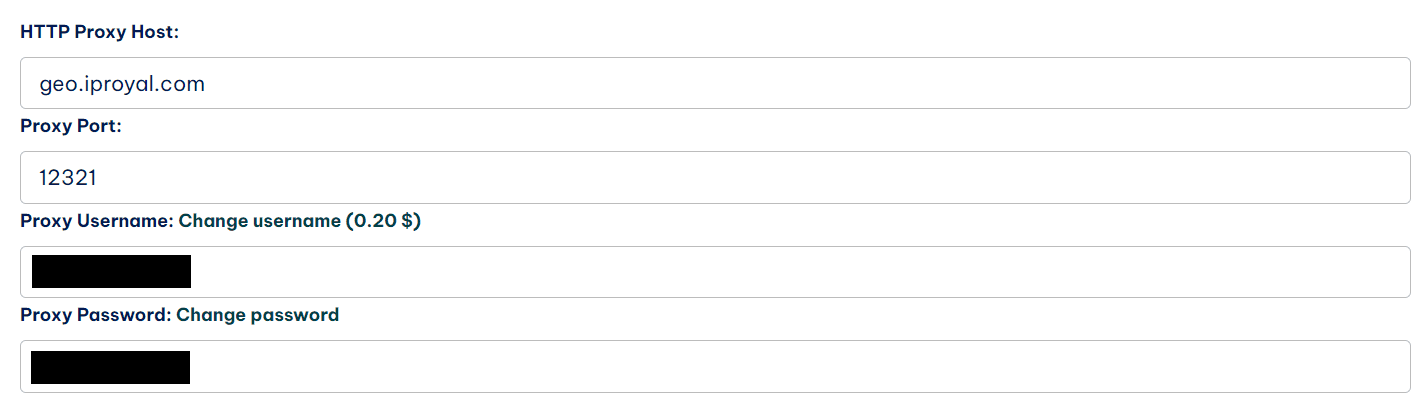

- The URL and port of your proxy provider. It should look something like this: http://geo.iproyal.com:12321

- Your username.

- Your password.

If you’re using IPRoyal residential proxies, you can find this information in your dashboard.
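The steps that follow build the proxy credentials with urllib3.make_headers(proxy_basic_auth=...). When debugging authentication errors, it helps to know what that header holds: a proxy-authorization entry containing the word Basic followed by the Base64-encoded "username:password" string. The standard-library sketch below rebuilds such a value purely for inspection; it is an illustration, not a replacement for make_headers(), and basic_proxy_auth_header is a hypothetical helper name.

```python
from base64 import b64decode, b64encode


def basic_proxy_auth_header(credentials: str) -> dict:
    """Build an HTTP Basic proxy-authorization header from 'username:password'."""
    token = b64encode(credentials.encode("latin-1")).decode("ascii")
    return {"proxy-authorization": "Basic " + token}


header = basic_proxy_auth_header("username:password")
# Decoding the token shows exactly which credentials would be sent to the proxy
sent = b64decode(header["proxy-authorization"].split(" ", 1)[1]).decode("latin-1")
```

Note that Base64 is an encoding, not encryption: anyone who sees the header can recover the credentials this way.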

First, make a header for proxy authorization using the urllib3.make_headers() function. Replace username and password with the actual username and password you’re using.

default_headers = urllib3.make_headers(proxy_basic_auth='username:password')

Then, create an instance of the ProxyManager class. It routes every request made through it via the proxy. Replace the proxy host and port with the details from your dashboard.

http = urllib3.ProxyManager('http://proxy-host:port', proxy_headers=default_headers)

Now you can call the .request() method on the http object to connect to websites through the proxy.

url = 'http://books.toscrape.com/media/cache/2c/da/2cdad67c44b002e7ead0cc35693c0e8b.jpg'

res = http.request('GET', url)

The rest of the code is the same as without the proxy:

def extract_name(url):
    file_name = url.split("/")[-1]
    return file_name

with open(extract_name(url), 'wb') as f:
    f.write(res.data)

Here’s the full script for convenience:

import urllib3

default_headers = urllib3.make_headers(proxy_basic_auth='username:password')

http = urllib3.ProxyManager('http://proxy-host:port', proxy_headers=default_headers)

url = 'http://books.toscrape.com/media/cache/2c/da/2cdad67c44b002e7ead0cc35693c0e8b.jpg'

res = http.request('GET', url)

def extract_name(url):
    file_name = url.split("/")[-1]
    return file_name

with open(extract_name(url), 'wb') as f:
    f.write(res.data)

Downloading Images With Wget

Wget is a Python library named after the popular command-line utility wget. Using the library, you can download all kinds of files (including images) through a simple interface that fits neatly into your Python code.

If you have the URL of a file, downloading it with wget is extremely easy.

First, import the library.

import wget

Then, create a variable to hold the URL of the image.

url = 'https://books.toscrape.com/media/cache/2c/da/2cdad67c44b002e7ead0cc35693c0e8b.jpg'

Finally, run the wget.download() function on the URL to download the image.

wget.download(url)

That’s it!

Using Proxies With Wget

Unfortunately, the Python library doesn’t offer any proxy options. It’s simpler than the other tools covered here, and that simplicity comes at the cost of features.

But if you need more flexibility and features, you can try the command-line tool it’s named after. It has plenty of features, including proxy support. To see how to run wget requests through proxies, check out our tutorial on using Wget with a proxy from the command line. And to see how to call CLI tools from Python, you can check out this article.
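As a rough sketch of combining the two, GNU wget reads the http_proxy and https_proxy environment variables, so you can set them for the child process only when calling wget from Python with subprocess. This assumes GNU wget is installed and on your PATH; wget_command and download_with_wget are hypothetical helpers, and the proxy URL is a placeholder.

```python
import os
import subprocess


def wget_command(url: str, proxy_url: str, out_dir: str = "."):
    """Return the argv list and environment for running GNU wget through a proxy."""
    # --directory-prefix (-P) sets the folder wget saves the file into
    cmd = ["wget", f"--directory-prefix={out_dir}", url]
    # Copy the current environment so the proxy applies only to the child process
    env = os.environ.copy()
    env["http_proxy"] = proxy_url
    env["https_proxy"] = proxy_url
    return cmd, env


def download_with_wget(url: str, proxy_url: str, out_dir: str = ".") -> None:
    cmd, env = wget_command(url, proxy_url, out_dir)
    # check=True raises CalledProcessError if wget exits with a non-zero status
    subprocess.run(cmd, env=env, check=True)
```

Passing the command as a list (rather than one shell string) avoids shell quoting problems with URLs that contain characters such as & or ?.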

Conclusion

With any of the tools described in this article, it’s relatively easy to download images in Python. The choice of library doesn’t matter much, so it’s best to pick a tool you already use. But if you’re just starting out, we recommend Requests for its ease of use and because the experience will help in many other web-focused Python tasks.

To learn more about Requests and how it can be used for web scraping, we suggest reading our extensive guide on the Python Requests library.

FAQ

I can’t open images that I download with Python. What should I do?

This can have many causes, but a common one is saving the picture with the wrong extension. The result is a file that looks like an image but won’t open, just as renaming a text file to img.jpg would.
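A related cause is saving something that isn’t an image at all, such as an HTML error page returned instead of the picture. Checking the first few bytes of the download tells you what you actually received. The sketch below recognizes a handful of common signatures (JPEG, PNG, GIF, WebP); sniff_image_extension is a hypothetical helper name, and the list of formats is deliberately incomplete.

```python
from typing import Optional


def sniff_image_extension(data: bytes) -> Optional[str]:
    """Guess an image file extension from its leading 'magic' bytes, or return None."""
    if data.startswith(b"\xff\xd8\xff"):
        return ".jpg"
    if data.startswith(b"\x89PNG\r\n\x1a\n"):
        return ".png"
    if data.startswith((b"GIF87a", b"GIF89a")):
        return ".gif"
    # WebP files are RIFF containers with "WEBP" at byte offset 8
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return ".webp"
    return None  # unknown format, or not an image (e.g. an HTML error page)
```

With Requests, for example, you could call sniff_image_extension(res.content) before saving and pick the extension from the result, or skip the file when it returns None.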

TypeError: 'module' object is not callable

This error can happen if you have a version of urllib3 older than 2.0, which doesn’t support the simpler urllib3.request() syntax (in those versions, urllib3.request is a module rather than a function). To solve it, you can either update the library, for example with pip install --upgrade urllib3, or create a PoolManager as described in the urllib3 user guide and call its request() method.
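To check which situation you’re in, you can read the installed urllib3 version without importing the library at all. A minimal standard-library sketch; the helper names major_version and urllib3_major_version are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def major_version(version_string: str) -> int:
    """Return the major component of a version string such as '2.2.1'."""
    return int(version_string.split(".")[0])


def urllib3_major_version() -> Optional[int]:
    """Major version of the installed urllib3, or None if it isn't installed."""
    try:
        return major_version(version("urllib3"))
    except PackageNotFoundError:
        return None


installed = urllib3_major_version()
if installed is None:
    print("urllib3 is not installed: pip install urllib3")
elif installed < 2:
    print("urllib3 is older than 2.0: upgrade it or use urllib3.PoolManager()")
else:
    print("urllib3.request() is available")
```

On older versions, the PoolManager route looks like http = urllib3.PoolManager() followed by res = http.request('GET', url).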

Tunnel connection failed: 407 Proxy Authentication Required

This error means your proxy provider rejected your credentials or didn’t receive any. It’s a common issue with urllib3, and the best fix is to follow the steps for using proxies with urllib3 above carefully, making sure the credentials are passed to ProxyManager through the proxy_headers argument.

Author

Vilius Dumcius

Product Owner

With six years of programming experience, Vilius specializes in full-stack web development with PHP (Laravel), MySQL, Docker, Vue.js, and TypeScript. Managing a skilled team at IPRoyal for years, he excels in overseeing diverse web projects and custom solutions. Vilius plays a critical role in managing proxy-related tasks for the company, serving as the lead programmer involved in every aspect of the business. Outside of his professional duties, Vilius channels his passion for personal and professional growth, balancing his tech expertise with a commitment to continuous improvement.
