How to Use ChatGPT for Web Scraping

Gints Dreimanis

If web scraping is not your primary area of activity, writing even a simple web scraping script can take an annoyingly long time. You need to learn (or remember) how to use libraries like Beautiful Soup. And if you don’t use a scripting language like Python or JavaScript regularly, you might need to refresh your knowledge of its syntax.

But what if there were a tool that could speed up the development process, allowing you to focus on the data rather than the code?

In this article, you’ll learn how to use ChatGPT for writing web scrapers. The article will cover the benefits and downsides of using ChatGPT for web scraping and show techniques that can be helpful for developers looking to increase their development speed with ChatGPT.

What Is ChatGPT?

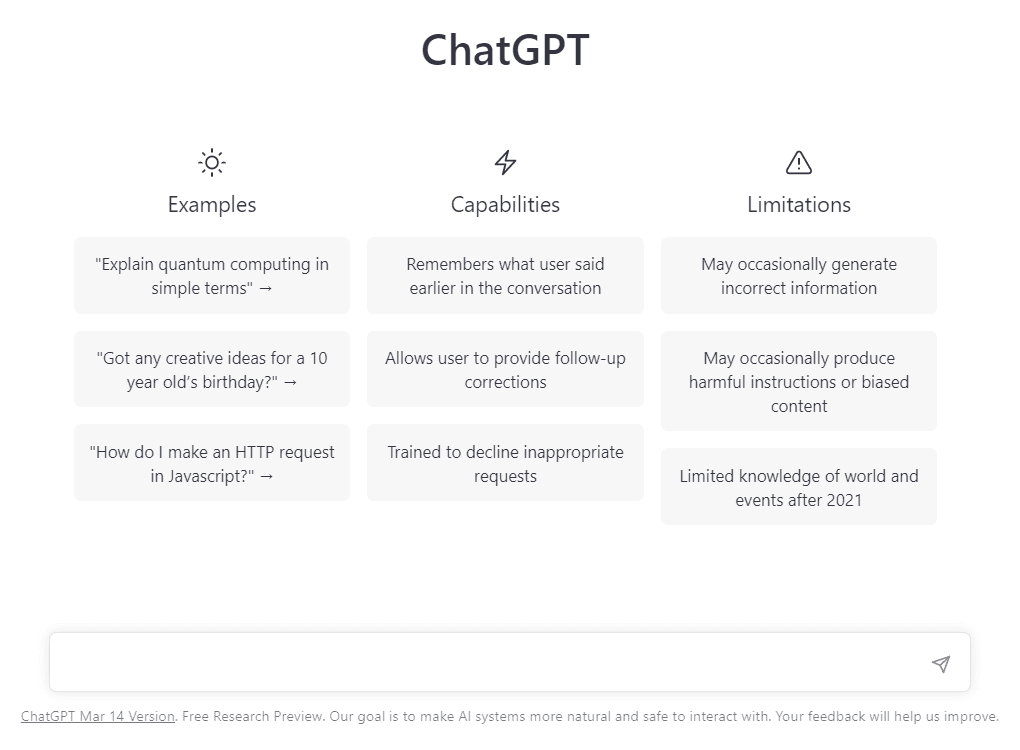

ChatGPT is a highly advanced chatbot created by OpenAI that uses machine learning to process natural language and generate human-like responses.

Due to its unprecedented ability to understand and generate natural language, it can do many tasks on a nearly human-like level: answer questions, generate full-length articles, and even write code.

Currently, it’s in beta testing and available for free to anyone who might be interested.

Interactions with ChatGPT follow a prompt-response pattern: you write a prompt containing a message or a task you want ChatGPT to accomplish, and it supplies you with an answer. ChatGPT stores the previous prompts and their responses, so you can ask it to update or explain its answers.

How to Write Web Scraping Code With ChatGPT?

Since ChatGPT can write code, it can, naturally, write code that does web scraping. To try it out, go to the application and sign up/log in.

You’ll see a text box where you can type prompts for the chatbot.

Unfortunately, just asking it to create a web scraper won’t lead to a great result in most cases.

ChatGPT is a statistical language model trained on a large amount of text from the internet, not a natural-language interface to a powerful no-code tool. Since ChatGPT can’t access the internet and can’t “look at” websites, it has no way to accurately write code for scraping the items you want from a page.

So, if you ask ChatGPT to write a web scraper by itself, there are two possible outcomes. The first one is that it has “read” tutorials for this particular task, in which case it might copy the contents of those tutorials.

The second option is worse: it will imagine some HTML that could plausibly be on the website and write web scraping code for that imagined markup. The resulting code will look convincing but won’t actually work.

That’s why you need to provide ChatGPT with more detailed instructions. Here are the things that are important to put in a prompt for a web scraping task:

- A link to the website you’re using.

- The kind of data that you want to scrape.

- CSS/XPath selectors that you can use to scrape this data.

Since ChatGPT acts more as an assistant for typing out boilerplate code than a no-code tool, this approach is not suited for complete beginners who cannot verify the resulting code.
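As a rough illustration, the three ingredients listed above can be assembled into a prompt with a small helper. This is only a sketch: the function name `build_scraper_prompt`, the `https://example.com/catalog` URL, and the selector hints are hypothetical placeholders, not something ChatGPT requires.

```python
def build_scraper_prompt(url, fields, selector_hints, language="Python"):
    """Assemble a prompt from the URL, the wanted data, and selector hints."""
    lines = [
        "I need to write a web scraper.",
        f"Here's the link to the website: {url}",
        f"I need to scrape {fields} and print them out in the console.",
    ]
    # Each hint tells the model where one piece of data lives in the HTML.
    lines.extend(selector_hints)
    lines.append(f"Please write the code using {language}.")
    return "\n\n".join(lines)


# Hypothetical example values; replace them with your own site and selectors.
prompt = build_scraper_prompt(
    url="https://example.com/catalog",
    fields="the names and the prices of the products",
    selector_hints=[
        "Each product name is in an h2 tag.",
        "The price is in a span element with a price class.",
    ],
)
print(prompt)
```

Keeping the selector hints as a separate list makes it easy to see at a glance whether every field you asked for has a matching hint.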

Here’s an example prompt you could use to scrape the books from the front page of Books to Scrape:

I need to write a web scraper.

Here's the link to the website: http://books.toscrape.com/

I need to scrape the titles and the prices of the books and print them out in the console.

Each book title is in a h3 tag. The price of the book is in a p element with a price_color class.

Please write the code using Python.

Here’s the code that ChatGPT generates:

    import requests
    from bs4 import BeautifulSoup

    # send a GET request to the website and get the HTML content
    response = requests.get("http://books.toscrape.com/")
    html_content = response.content

    # create a BeautifulSoup object with the HTML content
    soup = BeautifulSoup(html_content, "html.parser")

    # find all the book titles (which are in h3 tags) and prices (which are in p tags with a class of "price_color")
    book_titles = soup.find_all("h3")
    book_prices = soup.find_all("p", class_="price_color")

    # iterate over the book titles and prices and print them out
    for i in range(len(book_titles)):
        print(book_titles[i].text)
        print(book_prices[i].text)
        print()

It also provides the following explanation of the code, which is more or less correct:

This code uses the requests library to send a GET request to the website and get the HTML content, and then uses the BeautifulSoup library to parse the HTML and extract the book titles and prices. Finally, it iterates over the titles and prices and prints them out.

Running the code prints the book titles and their prices to the console.

    A Light in the ...
    £51.77

    Tipping the Velvet
    £53.74

    Soumission
    £50.10

    Sharp Objects
    £47.82

    Sapiens: A Brief History ...
    £54.23

    The Requiem Red
    £22.65

    ...
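One way to gain confidence in the selectors named in the prompt is to try them offline against a small saved fragment before running anything against the live site. The sketch below makes several assumptions: the `FIXTURE` markup is a hand-written, simplified stand-in rather than a copy of the real page, `extract_books` is a hypothetical helper, and the standard library’s `xml.etree.ElementTree` is swapped in for Beautiful Soup so the example has no dependencies. ElementTree only accepts well-formed markup, so real pages still need a lenient HTML parser.

```python
import xml.etree.ElementTree as ET

# Hand-written, simplified stand-in for two product cards (an assumption,
# not a copy of the live page).
FIXTURE = """
<section>
  <article>
    <h3><a href="#">A Light in the ...</a></h3>
    <p class="price_color">£51.77</p>
  </article>
  <article>
    <h3><a href="#">Tipping the Velvet</a></h3>
    <p class="price_color">£53.74</p>
  </article>
</section>
"""


def extract_books(markup):
    """Return (title, price) pairs found in a well-formed markup fragment."""
    root = ET.fromstring(markup)
    # Titles: all text inside each h3, including text of nested links.
    titles = ["".join(h3.itertext()).strip() for h3 in root.iter("h3")]
    # Prices: p elements whose class attribute is exactly "price_color".
    prices = [p.text.strip() for p in root.findall(".//p[@class='price_color']")]
    if len(titles) != len(prices):
        raise ValueError(f"{len(titles)} titles but {len(prices)} prices")
    return list(zip(titles, prices))


books = extract_books(FIXTURE)
for title, price in books:
    print(f"{title}: {price}")
```

Pairing the two lists with `zip` after checking their lengths avoids the silent mismatch that index-based loops can produce when one selector matches more elements than the other.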

Improving Code Together With ChatGPT

Since ChatGPT remembers the context of the conversation, it’s easy to upgrade the code it has written by giving it additional suggestions and requests for improvement.

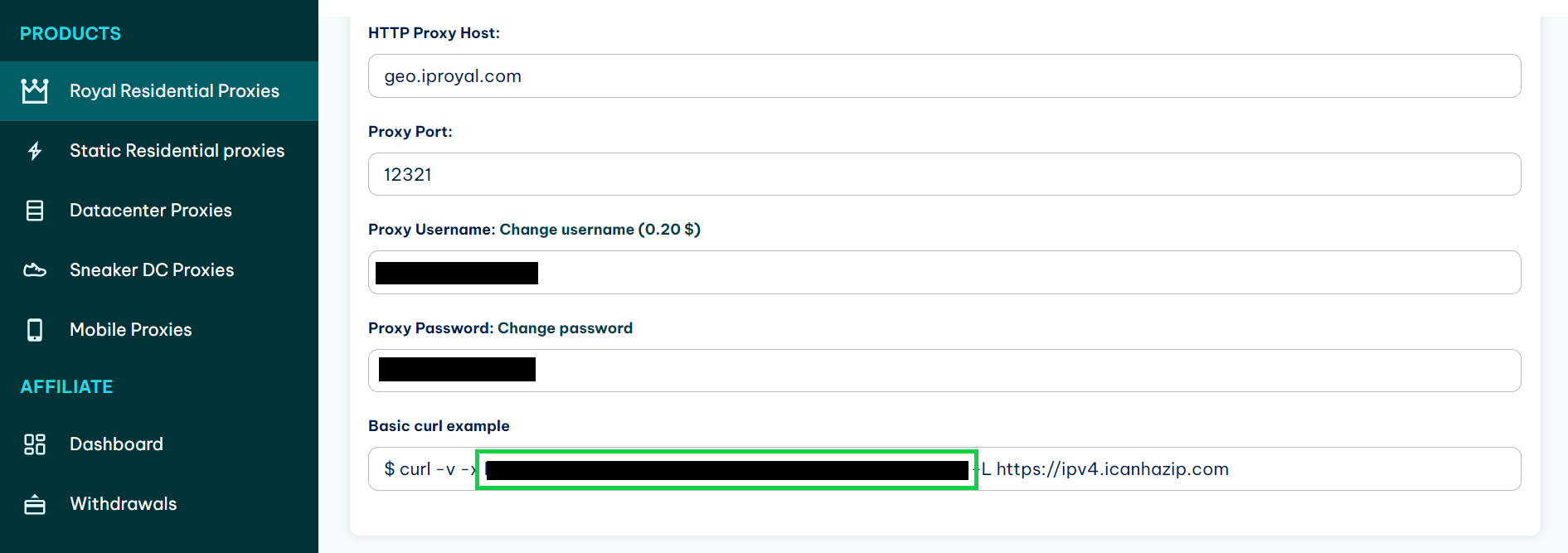

For example, web scraping scripts frequently use proxies to hide the IP address of the web scraper. This prevents your IP address from being blocked by website administrators who dislike your activities. Instead, the IP address of the proxy will be blocked. If you use a paid proxy service such as IPRoyal residential proxies, which rotates the IP on every request, this is not an issue.

To add a proxy to the script, all you need to do is ask ChatGPT to do it. Since adding a proxy is not a very context-sensitive task, it should do just fine.

Here’s the prompt:

I have a link to a proxy I can use. Extend the code to include that.

And here’s the resulting code:

    import requests
    from bs4 import BeautifulSoup

    # set up the proxy
    proxies = {
        "http": "http://your-proxy-address:port",
        "https": "http://your-proxy-address:port"
    }

    # send a GET request to the website and get the HTML content using the proxy
    response = requests.get("http://books.toscrape.com/", proxies=proxies)
    html_content = response.content

    # create a BeautifulSoup object with the HTML content
    soup = BeautifulSoup(html_content, "html.parser")

    # find all the book titles (which are in h3 tags) and prices (which are in p tags with a class of "price_color")
    book_titles = soup.find_all("h3")
    book_prices = soup.find_all("p", class_="price_color")

    # iterate over the book titles and prices and print them out
    for i in range(len(book_titles)):
        print(book_titles[i].text)
        print(book_prices[i].text)
        print()

And here’s the explanation that ChatGPT provides:

In this modified version of the code, we first set up a dictionary containing the proxy information (replace "your-proxy-address" and "port" with the appropriate values for your proxy). Then, when we send the GET request using the requests.get() function, we include the proxies argument and pass in the proxies dictionary. This tells the requests library to use the proxy when sending the request.

Everything else in the code remains the same as before.

All that’s left to do is to take the proxy link and paste it into the code.

If you’re using IPRoyal, you can find the link in your dashboard.

Note: A link to a proxy can contain sensitive information such as your username and password. Do not share this information with other parties, including ChatGPT. While OpenAI cares about the privacy of your conversations, there have also been instances of chat histories leaking to other users.
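One way to keep the proxy link out of both the source file and the chat is to read it from an environment variable at run time. The following is a minimal sketch under stated assumptions: the variable name `SCRAPER_PROXY_URL` and the helper `proxies_from_env` are hypothetical, and the demo credentials are fake.

```python
import os


def proxies_from_env(env=os.environ, var_name="SCRAPER_PROXY_URL"):
    """Build the proxies dictionary from an environment variable."""
    proxy_url = env.get(var_name)
    if not proxy_url:
        raise RuntimeError(f"Set {var_name} to your proxy link before running")
    # The same proxy URL is used for both schemes, mirroring the
    # dictionary in the generated script.
    return {"http": proxy_url, "https": proxy_url}


# Demo with a fake mapping instead of the real environment.
demo = proxies_from_env({"SCRAPER_PROXY_URL": "http://user:pass@proxy.example:8080"})
print(sorted(demo))
```

In the generated script, the hard-coded dictionary could then be replaced with `proxies = proxies_from_env()`, so the credentials never appear in code you might paste into a prompt.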

Tips for Making ChatGPT Coding Prompts

Writing code with ChatGPT might seem odd at first since it’s mostly used as an AI writer, but you’ll become more proficient as you gain experience and learn what it can and cannot do. As a baseline, think of it as a junior developer assistant that you can call on whenever you wish and that never gets tired.

The more specific the instructions you give it, the better. But due to the conversational nature of the interaction, you can always start with a rough prompt and then refine it with ChatGPT, improving the result along the way.

While ChatGPT is very confident, it’s important to always double-check (or test) its output. It can be catastrophically (but oh so confidently) wrong. In general, it’s best for simple tasks and writing boilerplate.
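Beyond reading the generated code, a cheap test is to sanity-check what the scraper returns. The sketch below is one possible check, not a complete test suite: `find_problems` and `PRICE_PATTERN` are hypothetical names, and the price format (a pound sign followed by a number with two decimals) is an assumption based on the output shown earlier.

```python
import re

# Assumed price format, e.g. "£51.77".
PRICE_PATTERN = re.compile(r"^£\d+\.\d{2}$")


def find_problems(titles, prices):
    """Return a list of human-readable problems with the scraped data."""
    problems = []
    if not titles:
        problems.append("no titles found")
    if len(titles) != len(prices):
        problems.append(f"{len(titles)} titles but {len(prices)} prices")
    for index, title in enumerate(titles):
        if not title.strip():
            problems.append(f"title {index} is empty")
    for index, price in enumerate(prices):
        if not PRICE_PATTERN.match(price.strip()):
            problems.append(f"price {index} looks wrong: {price!r}")
    return problems


# Clean data produces no problems; broken data is reported item by item.
print(find_problems(["Soumission", "Sharp Objects"], ["£50.10", "£47.82"]))
print(find_problems(["Soumission", ""], ["50.10"]))
```

An empty list means the data passed these particular checks. It does not prove the scraper is correct, but it catches the common failure where a guessed selector matches nothing or the wrong elements.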

Finally, remember that ChatGPT currently cannot access the internet and that its knowledge comes from statistical inference, not from facts encoded explicitly as code. Therefore, it cannot serve as a no-code tool for beginners who cannot understand and verify its output.

Conclusion

ChatGPT is a new but powerful technology that can do all kinds of awesome things.

In this article, you learned how you can use ChatGPT to write web scraping scripts. While it cannot be trusted to write web scrapers on its own, it can increase your development speed significantly. In a sense, it works like autocomplete on steroids.

For more tips on using ChatGPT for writing and working with code, you can read this article.

FAQ

Can ChatGPT write web scraping code for me?

Yes and no. While ChatGPT can generate web scraping code according to specifications, it cannot write a web scraper on its own. This is because it doesn’t have access to the internet or a rendering engine, so it has no way to convert natural language requests such as “titles” or “prices” into CSS/XPath selectors for elements on a specific website.

What downsides are there to writing code with ChatGPT?

Code written with ChatGPT can be inefficient, wrong, or even nonsensical, even if ChatGPT is very confident about its correctness. That’s why it’s important to verify the code that it generates before using it. If you are not able to verify ChatGPT-generated code, it’s better not to use it for generating code.

What kind of code is ChatGPT good for?

ChatGPT is good for writing small, local pieces of code that don’t require a lot of context. It can also be used for code completion and documentation. It’s quite bad at writing applications or code that requires a lot of context.

Author

Gints Dreimanis

Technical Writer

Gints is a writer and software developer who’s excited about helping others learn programming. He is currently the main editor of the Serokell Blog, where he’s responsible for content on functional programming and machine learning.
