How to Scrape a Website That Requires a Login: Python Tutorial

Eugenijus Denisov

Some data online is only displayed to users who are logged in. Sending regular GET requests, as you would when scraping publicly available data, won’t be enough: you need to create a session and log in first.

Other than creating the session and logging in, the process isn’t much different from regular web scraping. Note, however, that most websites require you to accept their Terms and Conditions when you register or log in. If those terms forbid web scraping, you can’t scrape that website.

How To Scrape Data Locked Behind A Login?

Websites put data behind a login for various reasons. A forum or social media website will likely want you to register, which gives it ad revenue opportunities and gives you a permanent identity.

Other websites, such as ecommerce stores, may ask you to log in to offer you a better user experience and to create marketing opportunities for themselves. Some websites are even akin to gated communities, where you can’t see any data unless you’re logged in.

Assuming it’s allowed by the Terms of Service, scraping data behind a login is largely the same as scraping publicly available data. If you already have an account, there’s only one additional step: a session object that stores your login information (typically cookies) between requests.

The main difficulties are CAPTCHA challenges and two-factor authentication (2FA). It’s often best to disable 2FA on the account you use for scraping, while CAPTCHAs can be handled by dedicated solving services or by switching IP addresses until the login goes through.

Step 1: Inspect the Website

Most websites handle logins the same way: an HTML login form sends an HTTP POST request to an authentication endpoint. The payload usually includes a username and password, or an email and password.

You’ll need to figure a few things out first:

- The URL that authenticates the login process.

- The method used to log in (it’s almost always a POST request).

- The names of the username and password form fields.

You can find these on the login page by opening the browser’s Developer Tools (usually F12) or by choosing the “Inspect element” option from the right-click menu.
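If you’d rather confirm these details from code than by eye, a minimal sketch like the one below reads them straight from a login page’s HTML with Beautiful Soup. The sample markup is a simplified assumption standing in for a real page, and describe_login_form is a hypothetical helper; on a real site you would pass in the HTML returned by a GET request to the login page.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Simplified, assumed markup standing in for a real login page.
SAMPLE_HTML = """
<form id="login" name="login" action="/authenticate" method="post">
  <input type="text" name="username">
  <input type="password" name="password">
  <button type="submit">Login</button>
</form>
"""


def describe_login_form(html, page_url):
    """Return the absolute action URL, HTTP method, and input names of the first form.

    Hypothetical helper: assumes the login form is the first <form> on the page.
    """
    soup = BeautifulSoup(html, "html.parser")
    form = soup.find("form")
    if form is None:
        raise ValueError("No <form> element found")
    # A relative action such as "/authenticate" is resolved against the page URL.
    action = urljoin(page_url, form.get("action", ""))
    # HTML forms default to GET when no method attribute is given.
    method = form.get("method", "get").upper()
    # Collect the name of every <input> that has one.
    field_names = [tag["name"] for tag in form.find_all("input") if tag.get("name")]
    return action, method, field_names


print(describe_login_form(SAMPLE_HTML, "https://practice.expandtesting.com/"))
```

Inputs are the only fields collected here; a form that uses select or textarea elements would need those added to the search.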

We’ll be using a test login page for our web scraping purposes. But before we can even try logging in, we need to set up our Python environment.

Step 2: Set Up Your Python Environment

We’ll use the Requests library to send both GET and POST HTTP requests, and Beautiful Soup 4 (the beautifulsoup4 package) to parse the data we retrieve:

```shell
pip install requests beautifulsoup4
```

Then import both libraries to get started:

```python
import requests
from bs4 import BeautifulSoup
```

Step 3: Sending a POST Request With Credentials

Our test login page has the username and password listed in the form (practice and SuperSecretPassword!), so we’ll be using those.

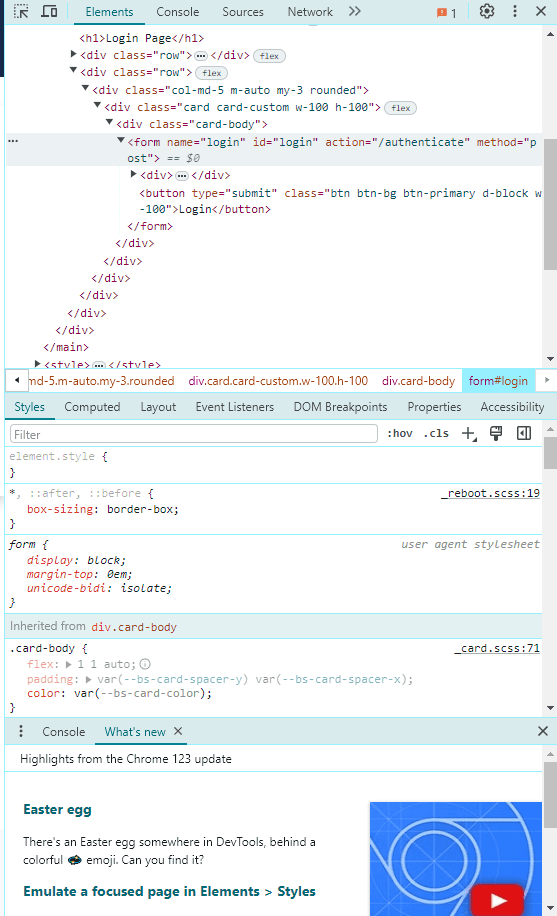

We still have to figure out the rest of the details listed above, all of which can be found through Developer Tools. Press **F12** to open them (they usually appear as a panel on the right side of the window) and head over to the **Elements** tab.

In that screenshot, we can see the form’s name and id and, most importantly, its action attribute (“action=”), which is where the authentication URL is usually stored. In this case, it’s “/authenticate”.

We should also expand the two input elements inside the form, since they hold the username and password field names. They’re usually structured like this:

```html
<input type="text" name="username">
<input type="password" name="password">
```

Luckily, this page follows exactly that pattern: the fields are called “username” and “password”.

With all of that information in hand, we can write a script that sends a POST request to the authentication endpoint:

```python
import requests
from bs4 import BeautifulSoup

# Create a session object
session = requests.Session()

# Add our login data
login_url = 'https://practice.expandtesting.com/authenticate'
credentials = {
    'username': 'practice',
    'password': 'SuperSecretPassword!'
}

# Send a POST request to our endpoint
response = session.post(login_url, data=credentials)

if response.ok:
    print("Login successful!")
else:
    print("Login failed!")
```

We start by creating a session object. A session keeps cookies (and settings such as headers) across requests, so once the server sets a login cookie, every later request made through the same session sends it back automatically. That’s essential when working with any login page.
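To see what this persistence looks like, the sketch below sets a cookie and a header on a session by hand, standing in for a real login, and then prepares (but doesn’t send) a request with Session.prepare_request, which builds a request the same way session.get() would. The cookie name sessionid, its value, and the User-Agent string are made up for illustration.

```python
import requests

session = requests.Session()

# Pretend the server set this cookie during login (hypothetical name and value).
session.cookies.set("sessionid", "abc123", domain="practice.expandtesting.com", path="/")
# Session-level headers are merged into every request as well.
session.headers.update({"User-Agent": "my-scraper/0.1"})

# Build the request the way session.get() would, without sending it.
request = requests.Request("GET", "https://practice.expandtesting.com/secure")
prepared = session.prepare_request(request)

print(prepared.headers.get("Cookie"))      # sessionid=abc123
print(prepared.headers.get("User-Agent"))  # my-scraper/0.1
```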

After that, we use the login data we gathered through Developer Tools. The form’s action was listed as “/authenticate” in the HTML, which is a path relative to the site, so we append it to the site’s address (https://practice.expandtesting.com) to get the full login URL.
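Instead of joining strings by hand, you can let Python’s standard library resolve the action against the page it came from with urllib.parse.urljoin. In this sketch, the login page path /login is an assumption for illustration; because the action starts with a slash, the result is the same for any page on that host.

```python
from urllib.parse import urljoin

# Assumed URL of the page that contains the login form.
login_page_url = "https://practice.expandtesting.com/login"

# The form's action attribute, as read in Developer Tools.
form_action = "/authenticate"

# A leading slash means "from the root of the same host".
login_url = urljoin(login_page_url, form_action)
print(login_url)  # https://practice.expandtesting.com/authenticate

# Without the slash, the action is resolved relative to the page's directory.
print(urljoin("https://example.com/account/login", "authenticate"))
# https://example.com/account/authenticate
```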

We also create a credentials dictionary of key-value pairs: the keys are the names of the form fields, and the values are the login credentials.
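Passing the dictionary through data= makes Requests form-encode it, the same way a browser submits an HTML form. A quick way to check what would be sent, without contacting the server, is to prepare the request and inspect its body and Content-Type header:

```python
import requests

login_url = "https://practice.expandtesting.com/authenticate"
credentials = {
    "username": "practice",
    "password": "SuperSecretPassword!",
}

# Prepare (but don't send) the same POST that session.post() would make.
prepared = requests.Request("POST", login_url, data=credentials).prepare()

print(prepared.headers["Content-Type"])  # application/x-www-form-urlencoded
print(prepared.body)  # username=practice&password=SuperSecretPassword%21
```

Note that special characters such as “!” are percent-encoded automatically, so the credentials can be written in the dictionary exactly as you’d type them into the form.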

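Finally, we send a POST request with the credentials as form data and print the result. If the login fails, you can print “response.status_code” to see which HTTP status code was returned. Keep in mind that response.ok is only False for error status codes (400 and above); many sites answer a failed login with a normal 200 page or a redirect back to the login form, so it’s worth also checking for something only a logged-in user would see.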
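A sketch of such a stricter check follows. It assumes, for illustration only, that a successful login on the test site ends on a page whose path is /secure, or that the resulting page contains a link to /logout; both markers should be confirmed in Developer Tools, and login_succeeded is a hypothetical helper. It takes the final URL and HTML as plain strings so it can be tried offline. Since Requests follows redirects by default, with a real response you would pass response.url and response.text.

```python
from urllib.parse import urlparse

from bs4 import BeautifulSoup


def login_succeeded(final_url, html):
    """Heuristic login check (hypothetical helper; both markers are assumptions).

    Treats the login as successful if we ended up on /secure
    or if the page offers a logout link.
    """
    if urlparse(final_url).path.rstrip("/") == "/secure":
        return True
    soup = BeautifulSoup(html, "html.parser")
    # CSS attribute selector: any <a> whose href ends with "/logout".
    return soup.select_one('a[href$="/logout"]') is not None


# With a real response: login_succeeded(response.url, response.text)
print(login_succeeded("https://practice.expandtesting.com/secure", ""))               # True
print(login_succeeded("https://practice.expandtesting.com/login", "<form></form>"))  # False
```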

That covers a simple login process. If the website you’re logging into requires a CSRF token, which is quite common nowadays, you’ll need to send a GET request to the login page first and extract the token, either from the response headers or, if it’s embedded in the HTML, with CSS selectors. The token is then sent along with your credentials in the POST request.
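Here’s a sketch of that flow for a token embedded in the HTML. It assumes the login form carries a hidden input named csrf_token; the real field name varies by site, so check it in Developer Tools. Both extract_csrf_token and login_with_csrf are hypothetical helpers, and the parsing step is kept separate so it can be tried on a sample string.

```python
import requests
from bs4 import BeautifulSoup


def extract_csrf_token(html, field_name="csrf_token"):
    """Return the value of a hidden CSRF input, or None if it isn't there.

    The default field name is an assumption; sites use names such as
    "csrf_token", "_token", or "authenticity_token".
    """
    soup = BeautifulSoup(html, "html.parser")
    field = soup.select_one(f'input[name="{field_name}"]')
    return field.get("value") if field else None


def login_with_csrf(session, login_page_url, login_url, credentials, field_name="csrf_token"):
    """GET the login page for a fresh token, then POST it with the credentials."""
    page = session.get(login_page_url)
    page.raise_for_status()
    token = extract_csrf_token(page.text, field_name)
    if token is None:
        raise RuntimeError(f"No {field_name!r} field found on {login_page_url}")
    payload = {**credentials, field_name: token}
    # The same session keeps any cookie set by the GET, which many CSRF checks require.
    return session.post(login_url, data=payload)


sample = '<form><input type="hidden" name="csrf_token" value="a1b2c3"></form>'
print(extract_csrf_token(sample))  # a1b2c3
```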

A CSRF token may also be stored in a cookie. There are many ways to work around these issues, but if your web scraping project is small, it’s often easier to simply switch to browser automation such as Selenium.
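When the token lives in a cookie, a common pattern is to read the cookie after a first GET request and echo it back in a request header. The names below are assumptions borrowed from Django’s defaults (a csrftoken cookie and an X-CSRFToken header), and csrf_header_from_cookie is a hypothetical helper. Some frameworks run additional checks, so treat this as a starting point. Here, the cookie is set by hand in place of the real GET.

```python
import requests


def csrf_header_from_cookie(session, cookie_name="csrftoken", header_name="X-CSRFToken"):
    """Build a header dict that echoes a CSRF cookie back to the server.

    Cookie and header names are assumptions (Django's defaults).
    """
    token = session.cookies.get(cookie_name)
    if token is None:
        raise KeyError(f"Cookie {cookie_name!r} not set; GET the login page first")
    return {header_name: token}


session = requests.Session()
# In a real run, this cookie would come from session.get(login_page_url).
session.cookies.set("csrftoken", "tok123")
headers = csrf_header_from_cookie(session)
print(headers)  # {'X-CSRFToken': 'tok123'}
# Then: session.post(login_url, data=credentials, headers=headers)
```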

Step 4: Scraping Data

Once we’re logged in, we can use the same session object to send GET requests to protected pages. However, the session only exists while the script is running, so every time you run the code again you have to send the login POST request first; otherwise, the protected page will likely be unreachable.
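If re-sending the login on every run is a problem, one option is to save the session’s cookies to a file and load them into a new session next time. A minimal sketch using session.cookies.get_dict() and requests.utils.cookiejar_from_dict; the file name is arbitrary, and this simple version drops cookie attributes such as domain and expiry, so the loaded cookies will be sent to every host the session contacts. The server may also have expired the login in the meantime, so you still need to fall back to logging in again when a protected page doesn’t load.

```python
import json
from pathlib import Path

import requests

COOKIE_FILE = Path("cookies.json")  # arbitrary file name


def save_cookies(session, path=COOKIE_FILE):
    """Write the session's cookies to disk as a simple name -> value mapping."""
    path.write_text(json.dumps(session.cookies.get_dict()))


def load_cookies(session, path=COOKIE_FILE):
    """Load previously saved cookies into the session; return False if none were saved."""
    if not path.exists():
        return False
    saved = json.loads(path.read_text())
    session.cookies.update(requests.utils.cookiejar_from_dict(saved))
    return True
```

A typical run would call load_cookies first, try the protected page, and only log in (then call save_cookies) if that request doesn’t return the expected content.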

Since our test site only has one page that’s inaccessible without logging in, we’ll scrape that one:

```python
import requests
from bs4 import BeautifulSoup

# Create a session object
session = requests.Session()

# Add our login data
login_url = 'https://practice.expandtesting.com/authenticate'
credentials = {
    'username': 'practice',
    'password': 'SuperSecretPassword!'
}

# Send a POST request to our endpoint
response = session.post(login_url, data=credentials)

if response.ok:
    print("Login successful!")
else:
    print("Login failed!")

data_url = 'https://practice.expandtesting.com/secure'
data_page = session.get(data_url)

if data_page.ok:
    print("Data retrieved successfully!")

    # Use Beautiful Soup to parse HTML content
    soup = BeautifulSoup(data_page.text, 'html.parser')

    # Find the first <h1> element; find() returns None if there isn't one
    heading = soup.find('h1')
    if heading is not None:
        print("Heading:", heading.get_text(strip=True))
    else:
        print("No <h1> found on the page.")
else:
    print("Failed to retrieve data.")
```

The first part is identical to the previous script. We then define the URL we want to scrape and send a GET request using the same session object.

Next, an “if” statement checks whether the response indicates success. If it does, we create a soup object that we’ll use to parse the page’s content.

There’s not a lot on the post-login page, so we’ll **capture the H1 heading and print it out**. Finally, if we didn’t receive a successful response, we print an error message.
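On pages with more content, CSS selectors via select() and select_one() are often more convenient than find(), and checking for None avoids an AttributeError when an element is missing. A small sketch on an assumed, simplified version of a post-login page (the markup is made up for illustration, not copied from the real site):

```python
from bs4 import BeautifulSoup

# Assumed, simplified markup; inspect the real page to find the right selectors.
html = """
<h1>Secure Area</h1>
<p>Welcome to the Secure Area.</p>
<p>Use the button below to log out.</p>
<a href="/logout">Logout</a>
"""

soup = BeautifulSoup(html, "html.parser")

heading = soup.select_one("h1")
# get_text(strip=True) trims surrounding whitespace; guard against a missing element.
heading_text = heading.get_text(strip=True) if heading else None

# select() returns a list of every matching element.
paragraphs = [p.get_text(strip=True) for p in soup.select("p")]
logout_link = soup.select_one('a[href="/logout"]')

print(heading_text)
print(paragraphs)
print(logout_link["href"] if logout_link else "no logout link")
```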

Step 5: Handling Common Login Issues

There are a few issues you can run into when logging in and scraping. One of them is CAPTCHAs, which can appear when scraping almost any website.

If a CAPTCHA appears during regular scraping, you’ll need to switch IP addresses and, likely, log in again to avoid further challenges. If it appears during the login process, you can try switching to browser automation to see whether that avoids the CAPTCHA. If it doesn’t, you may need CAPTCHA-solving tools or services.
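Switching IP addresses usually means routing requests through proxies. A minimal rotation sketch with itertools.cycle; the proxy URLs are placeholders rather than real endpoints, and proxies_for and fresh_login are hypothetical helpers. Each call to fresh_login starts a new session on the next proxy and logs in again, and the returned proxies mapping should be passed to that session’s later requests as well.

```python
import itertools

import requests

# Placeholder proxy URLs: replace with the endpoints your provider gives you.
PROXY_URLS = [
    "http://user:pass@proxy1.example:8000",
    "http://user:pass@proxy2.example:8000",
]
proxy_pool = itertools.cycle(PROXY_URLS)


def proxies_for(proxy_url):
    """Route both HTTP and HTTPS traffic through the same proxy."""
    return {"http": proxy_url, "https": proxy_url}


def fresh_login(login_url, credentials):
    """Start a new session on the next proxy and log in again (hypothetical helper)."""
    proxies = proxies_for(next(proxy_pool))
    session = requests.Session()
    response = session.post(login_url, data=credentials, proxies=proxies)
    # Reuse the same proxies for later requests, e.g. session.get(url, proxies=proxies).
    return session, proxies, response
```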

Two-factor authentication is another common hurdle. It can usually be disabled in the website’s account settings, which is the easiest option. However, you should keep it enabled on your regular account, so create a separate throwaway account for web scraping.

Beyond that, handling 2FA calls for a solution tailored to the specific website and method, so there’s no single approach that always works.

Full Code Snippet

```python
import requests
from bs4 import BeautifulSoup

# Create a session object
session = requests.Session()

# Add our login data
login_url = 'https://practice.expandtesting.com/authenticate'
credentials = {
    'username': 'practice',
    'password': 'SuperSecretPassword!'
}

# Send a POST request to our endpoint
response = session.post(login_url, data=credentials)

if response.ok:
    print("Login successful!")
else:
    print("Login failed!")

data_url = 'https://practice.expandtesting.com/secure'
data_page = session.get(data_url)

if data_page.ok:
    print("Data retrieved successfully!")

    # Use Beautiful Soup to parse HTML content
    soup = BeautifulSoup(data_page.text, 'html.parser')

    # Find the first <h1> element; find() returns None if there isn't one
    heading = soup.find('h1')
    if heading is not None:
        print("Heading:", heading.get_text(strip=True))
    else:
        print("No <h1> found on the page.")
else:
    print("Failed to retrieve data.")
```