Web Scraping With Selenium and Python

Justas Vitaitis


Python libraries like Requests and Scrapy are very useful for scraping simple websites. But they struggle with the modern web, where single-page applications driven by JavaScript are the norm. In these apps, much of the information becomes available only after a browser executes the page's JavaScript.

For this reason, modern web scrapers use tools that simulate the actions of real users more closely, rendering each page just as a regular user's browser would.

One of these tools is Selenium. In this tutorial, you’ll learn how Selenium works and how to use it to simulate common user actions such as clicking, typing, and scrolling.

What Is Selenium?

Selenium is a collection of open-source projects aimed at supporting browser automation. While these tools are mainly used for testing web applications, anything that can drive a browser automatically can also be used for web scraping.

It can automate most of the actions a real person can take in the browser: scrolling, typing, clicking buttons, taking screenshots, and even executing your own JavaScript scraping code.

Interaction happens through Selenium’s WebDriver API, which has bindings for the most popular languages like JavaScript, Python, and Java.

Selenium vs. BeautifulSoup

While you can perform many basic scraping tasks in Python with Requests and BeautifulSoup or a scraping framework like Scrapy, these tools struggle with modern web pages that rely heavily on JavaScript. To scrape these websites, you need to interact with the JavaScript on the page, and for that, you need a browser.

Selenium enables you to automate these interactions. It launches a browser and executes the necessary JavaScript, so you can scrape the results. In contrast, an HTML parser can only return the JavaScript code that the page contains; it can't execute it.
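To see the difference concretely, the sketch below feeds a small, made-up page to Python's built-in `html.parser`, standing in for BeautifulSoup, which behaves the same way here. The page fills an empty `<div>` from JavaScript. The parser sees only the script's source code, and the `<div>` stays empty because nothing executes the script. The page and the `TextCollector` class are hypothetical, written only for this illustration.

```python
from html.parser import HTMLParser

# A hypothetical single-page-app shell: the visible content is injected by JavaScript.
PAGE = """
<html><body>
  <div id="app"></div>
  <script>
    document.getElementById("app").textContent = "Loaded by JavaScript";
  </script>
</body></html>
"""


class TextCollector(HTMLParser):
    """Collects the text inside <div id="app"> and inside <script> tags."""

    def __init__(self):
        super().__init__()
        self.in_app = False
        self.in_script = False
        self.app_text = ""
        self.script_text = ""

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) tuples
        if tag == "div" and ("id", "app") in attrs:
            self.in_app = True
        elif tag == "script":
            self.in_script = True

    def handle_endtag(self, tag):
        if tag == "div":
            self.in_app = False
        elif tag == "script":
            self.in_script = False

    def handle_data(self, data):
        if self.in_app:
            self.app_text += data
        elif self.in_script:
            self.script_text += data


parser = TextCollector()
parser.feed(PAGE)
print(repr(parser.app_text.strip()))           # '' : nothing ran the script
print("textContent" in parser.script_text)     # True: only the JS source is visible
```

A browser driven by Selenium would instead run the script, and the same `<div>` would contain "Loaded by JavaScript".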

Web Scraping With Selenium Tutorial

In this tutorial, you’ll learn how to use Selenium’s Python bindings to search the r/programming subreddit. You’ll also learn how to mimic actions such as clicking, typing, and scrolling.

In addition, we’ll show you how to add a proxy to the scraping script so that your real IP doesn’t get detected and blocked by Reddit.

In a previous tutorial on Python web scraping, we covered how to scrape the top posts of a subreddit using the old Reddit UI. The current UI is less friendly to web scrapers because of two additional difficulties:

- The page uses infinite scroll instead of pages for posts;

- The class names of items are obfuscated — the page uses class names like “eYtD2XCVieq6emjKBH3m” instead of “title-blank-outbound”.

But with the help of Selenium, you can easily handle these difficulties.

Setup

You’ll need Python 3 installed on your computer to follow this tutorial. You also need to download ChromeDriver (the WebDriver for Chrome) in the version that matches the Chrome version on your computer, and unzip it in a location of your choice. This is what the script will use to drive actions in the browser.

After that, use the command line to install selenium, a library with Python bindings for WebDriver:

```bash
pip install selenium
```

After downloading the WebDriver and installing the bindings, create a new Python file called reddit_scraper.py and open it in a code editor.

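Import all the required libraries there:

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys  # needed later to press Enter
from time import sleep
```

After that, define all that is necessary to launch the WebDriver. In the code snippet below, substitute “C:\your\path\here\chromedriver.exe” with the path to the WebDriver you downloaded. The `r` prefix makes the path a raw string, so Python doesn't treat the backslashes as escape characters.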

```python
# Point Selenium at the ChromeDriver executable you downloaded
service = Service(r"C:\your\path\here\chromedriver.exe")
options = webdriver.ChromeOptions()
driver = webdriver.Chrome(service=service, options=options)

driver.get("https://www.reddit.com/r/programming/")
sleep(4)  # give the page time to load
```

If you run the script right now, it should open up a browser and go to r/programming.

Accepting Cookies

When you start a session on Reddit with Selenium, you might be asked about your cookie preferences. This pop-up can interfere with further actions, so it’s a good idea to close it.

So the first thing the script should do is try to click the “Accept all” button for cookies.

An easy way to find the button is with an XPath selector that matches the button whose text contains “Accept all”.

```python
accept = driver.find_element(
    By.XPATH, '//button[contains(text(), "Accept all")]')
```

After that, instruct the driver to click it with the .click() method.

If the pop-up doesn’t appear, find_element() raises a NoSuchElementException, so you should wrap the whole thing in a try-except block.

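```python
from selenium.common.exceptions import NoSuchElementException

try:
    accept = driver.find_element(
        By.XPATH, '//button[contains(text(), "Accept all")]')
    accept.click()
    sleep(4)
except NoSuchElementException:
    # No cookie pop-up was shown, so there is nothing to dismiss
    pass
```

As you can see, Selenium’s API for finding elements is very similar to the ones used by BeautifulSoup and Scrapy.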

Interacting With the Search Bar

After you have gotten the cookie pop-up out of the way, you can start working with the search bar at the top of the page.

First, you need to select it, click it, and wait a little for it to become active.

```python
# Find the search box, focus it, and give it a moment to become active
search_bar = driver.find_element(By.CSS_SELECTOR, 'input[type="search"]')
search_bar.click()
sleep(1)
```

After that, the script needs to type in a search phrase, wait a little for the text to appear, and then press the Enter key. Finally, it should wait a bit for the page to load.

These lines of code do that:

```python
search_bar.send_keys("selenium")
sleep(1)
search_bar.send_keys(Keys.ENTER)  # Keys comes from selenium.webdriver.common.keys
sleep(4)
```

In addition to time.sleep(), Selenium has more sophisticated tools for waiting: an implicit wait, which makes every element lookup keep retrying for up to a set amount of time before giving up, and an explicit wait, which pauses until a specific condition is fulfilled or a timeout expires.

We won’t cover them in this article, but you’re welcome to explore them when writing your own scripts.
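Conceptually, an explicit wait is a polling loop: it checks a condition repeatedly until the condition returns something truthy or a timeout expires. The standard-library sketch below implements that idea so you can see the mechanics; `wait_until` is a hypothetical helper written for illustration, not part of Selenium.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() until it returns a truthy value or timeout seconds pass.

    Returns the truthy value; raises TimeoutError if the deadline is reached.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Simulated page: the results "appear" on the third check.
checks = {"count": 0}


def results_loaded():
    checks["count"] += 1
    return ["title"] if checks["count"] >= 3 else []


print(wait_until(results_loaded, timeout=5, poll_interval=0.01))  # ['title']
```

In a real script, Selenium's own `WebDriverWait` does this for you, for example `WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CSS_SELECTOR, "h3")))`, with `WebDriverWait` imported from `selenium.webdriver.support.ui` and `expected_conditions` imported as `EC` from `selenium.webdriver.support`. Unlike a fixed `sleep(6)`, it returns as soon as the results are present.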

This is the full code for searching:

```python
search_bar = driver.find_element(By.CSS_SELECTOR, 'input[type="search"]')
search_bar.click()
sleep(1)
search_bar.send_keys("selenium")
sleep(1)
search_bar.send_keys(Keys.ENTER)
sleep(6)
```

Scraping Search Results

Once you have loaded search results, it’s pretty easy to scrape the results’ titles: they are the only H3s on the page.

```python
titles = driver.find_elements(By.CSS_SELECTOR, 'h3')
```

But the page initially loads only the first 25 or so results, so you won’t get all of them. To get more, you need to scroll down the page.

A typical way to do this is to use Selenium’s execute_script() method, which runs any JavaScript code in the page. With it, you can call the scrollIntoView() method on the last title scraped, which makes the browser load more results.

Here’s how you can do that:

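```python
for _scroll in range(5):
    # Scroll the last loaded title into view to trigger loading more results
    driver.execute_script(
        "arguments[0].scrollIntoView();", titles[-1])
    sleep(2)
    # Re-query the page so the list includes the newly loaded titles
    titles = driver.find_elements(By.CSS_SELECTOR, 'h3')
```

The loop above scrolls five times, each time updating the list of titles with the search results that are revealed.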
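A fixed number of scrolls can stop too early or keep scrolling after the feed has run out. One alternative, sketched below under the assumption that a round with no new titles means the results are exhausted, keeps scrolling until nothing new appears and collects unique, non-empty titles in order. `collect_until_stable` is a hypothetical helper; it takes two callables instead of a driver so the logic can be tested without a browser.

```python
import time


def collect_until_stable(get_titles, scroll, max_rounds=20, pause=2.0):
    """Scroll until a round adds no new titles (or max_rounds is reached).

    get_titles: callable returning the title strings currently on the page.
    scroll: callable that scrolls the page to trigger loading more results.
    Returns unique, non-empty titles in the order they were first seen.
    """
    seen = []
    seen_set = set()

    def absorb():
        added = 0
        for title in get_titles():
            title = title.strip()
            if title and title not in seen_set:
                seen_set.add(title)
                seen.append(title)
                added += 1
        return added

    absorb()
    for _ in range(max_rounds):
        scroll()
        time.sleep(pause)
        if absorb() == 0:
            break  # nothing new appeared, so assume the feed is exhausted
    return seen


# Simulated feed: each scroll reveals two more titles, up to six.
feed = [f"Post {i}" for i in range(1, 7)]
state = {"visible": 2}


def fake_get_titles():
    return feed[: state["visible"]]


def fake_scroll():
    state["visible"] = min(state["visible"] + 2, len(feed))


print(collect_until_stable(fake_get_titles, fake_scroll, pause=0))
# ['Post 1', 'Post 2', 'Post 3', 'Post 4', 'Post 5', 'Post 6']
```

With Selenium, you could pass `lambda: [t.text for t in driver.find_elements(By.CSS_SELECTOR, 'h3')]` as `get_titles` and `lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")` as `scroll`. Keep `max_rounds` as a safety limit in case the page never stops loading.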

Finally, we can print out the list of titles to the screen and quit the browser.

```python
for title in titles:
    print(title.text)

driver.quit()
```

Here’s the full script:

```python
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys
from time import sleep

service = Service(r"C:\your\path\here\chromedriver.exe")
options = webdriver.ChromeOptions()
driver = webdriver.Chrome(service=service, options=options)

driver.get("https://www.reddit.com/r/programming/")
sleep(4)

# Dismiss the cookie pop-up if it appears
try:
    accept = driver.find_element(
        By.XPATH, '//button[contains(text(), "Accept all")]')
    accept.click()
    sleep(4)
except NoSuchElementException:
    pass

# Search the subreddit
search_bar = driver.find_element(By.CSS_SELECTOR, 'input[type="search"]')
search_bar.click()
sleep(1)
search_bar.send_keys("selenium")
sleep(1)
search_bar.send_keys(Keys.ENTER)
sleep(6)

# Collect titles, scrolling five times to load more results
titles = driver.find_elements(By.CSS_SELECTOR, 'h3')
for _scroll in range(5):
    driver.execute_script(
        "arguments[0].scrollIntoView();", titles[-1])
    sleep(2)
    titles = driver.find_elements(By.CSS_SELECTOR, 'h3')

for title in titles:
    print(title.text)

driver.quit()
```

Adding a Proxy to a Selenium Script

When doing web scraping with Selenium or any other tool, it’s important not to use your real IP address. Many websites find web scraping intrusive, and their administrators may ban the IP addresses of suspected scrapers.

For this reason, web scrapers frequently use proxies — servers that hide your real IP address.

Many free proxies are available, but they can be very slow and pose privacy risks. A paid proxy is usually the better choice: paid proxies are typically inexpensive and offer faster, more private service.

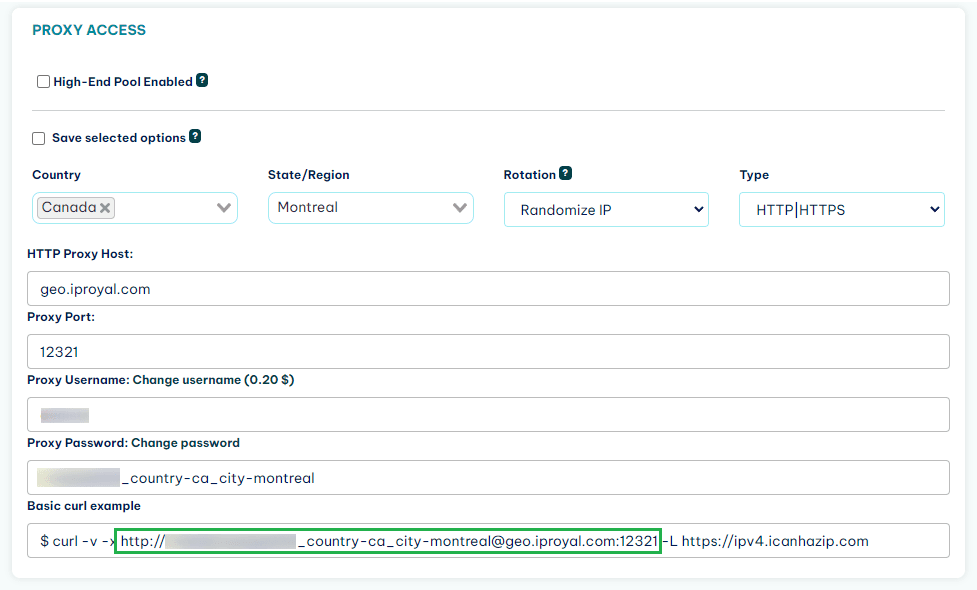

In this tutorial, you’ll use IPRoyal residential proxies. These are great for web scraping projects because they take care of rotating your IP on every request. In addition, they are sourced from a diverse set of locations, which makes your web scraping activities hard to detect.

First, you need to find the link to your proxy. If you’re using IPRoyal, you can find the link in your dashboard.

Be careful! A proxy access link usually contains your username and password for the service, so treat it like any other password and keep it out of code you share.
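One way to keep credentials out of your source code is to read them from environment variables and assemble the proxy URL at runtime. The sketch below does that; the helper `proxy_url_from_env` and the variable names `PROXY_USER`, `PROXY_PASS`, `PROXY_HOST`, and `PROXY_PORT` are hypothetical choices for illustration. Passwords can contain characters such as `@` or `:` that would break the URL, so the credentials are percent-encoded with `urllib.parse.quote`, assuming the tool that consumes the URL decodes them as standard URL parsers do.

```python
import os
from urllib.parse import quote

REQUIRED = ("PROXY_USER", "PROXY_PASS", "PROXY_HOST", "PROXY_PORT")


def proxy_url_from_env(env=os.environ):
    """Build 'http://user:pass@host:port' from (hypothetical) environment variables."""
    missing = [name for name in REQUIRED if name not in env]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    # safe="" makes quote() encode '/', ':' and '@' too
    user = quote(env["PROXY_USER"], safe="")
    password = quote(env["PROXY_PASS"], safe="")
    return f"http://{user}:{password}@{env['PROXY_HOST']}:{env['PROXY_PORT']}"


# Example values only; in practice, set these in your shell, not in code.
example_env = {
    "PROXY_USER": "alice",
    "PROXY_PASS": "p@ss:word",
    "PROXY_HOST": "proxy.example.com",
    "PROXY_PORT": "12321",
}
print(proxy_url_from_env(example_env))
# http://alice:p%40ss%3Aword@proxy.example.com:12321
```

Calling `proxy_url_from_env()` with no argument reads the real environment, and the result can be used as both the 'http' and 'https' values of the proxy settings.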

Unfortunately, Chrome’s own proxy setting doesn’t accept a username and password, so it can’t use proxy links with authentication. For this reason, you’ll need another library called selenium-wire, which routes the browser’s traffic through its own local proxy and forwards it to your upstream proxy along with your credentials.

First, install it using your command line:

```bash
pip install selenium-wire
```

Then, go to the imports in your code and replace from selenium import webdriver with from seleniumwire import webdriver.

After that, adjust the code that initializes the driver. Keep the Service object pointing to your ChromeDriver, but instead of Chrome options, pass a dictionary with your proxy settings through the seleniumwire_options argument. The older executable_path argument has been removed in recent Selenium 4 releases, so passing the path through Service is the safer choice.

```python
service = Service(r"C:\your\path\here\chromedriver.exe")

options = {
    'proxy': {
        # Placeholders: use the username, password, and host from your dashboard
        'http': 'http://username:password@your-proxy-host:12321',
        'https': 'http://username:password@your-proxy-host:12321',
    }
}

driver = webdriver.Chrome(service=service,
                          seleniumwire_options=options)
```

This is how the top part of your script should look:

```python
from seleniumwire import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys
from time import sleep

service = Service(r"C:\your\path\here\chromedriver.exe")

options = {
    'proxy': {
        # Placeholders: use the username, password, and host from your dashboard
        'http': 'http://username:password@your-proxy-host:12321',
        'https': 'http://username:password@your-proxy-host:12321',
    }
}

driver = webdriver.Chrome(service=service,
                          seleniumwire_options=options)
```

The rest of the script works the same with selenium-wire.
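If you switch proxies often, it can help to build the proxy dictionary in one place. The sketch below uses a hypothetical helper, `build_seleniumwire_options`, that returns the same structure as the dictionary above and optionally adds a `no_proxy` entry, which selenium-wire accepts as a comma-separated list of hosts that should bypass the proxy. The proxy URL in the example is a placeholder.

```python
def build_seleniumwire_options(proxy_url, bypass_hosts=None):
    """Return a seleniumwire_options dict routing HTTP and HTTPS through proxy_url."""
    proxy = {"http": proxy_url, "https": proxy_url}
    if bypass_hosts:
        # selenium-wire expects no_proxy as a single comma-separated string
        proxy["no_proxy"] = ",".join(bypass_hosts)
    return {"proxy": proxy}


options = build_seleniumwire_options(
    "http://username:password@proxy.example.com:12321",  # placeholder URL
    bypass_hosts=["localhost", "127.0.0.1"],
)
print(options["proxy"]["no_proxy"])  # localhost,127.0.0.1
```

The result plugs into the same call as before: `webdriver.Chrome(service=service, seleniumwire_options=options)`.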

Now, each time you connect to Reddit, you will have a different IP address. If Reddit flags the scraping activity, it can only ban one of those addresses, so you can keep browsing r/programming from your own IP in your free time.

Conclusion

In this tutorial, you learned how to use Selenium to do basic actions in your browser, such as clicking, scrolling, and typing. If you want to learn more about what you can do using Selenium, these video series are an awesome resource.

For more web scraping tutorials, you’re welcome to read our How To section.

FAQ

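Can I use Selenium with non-Chrome browsers such as Firefox or Safari?

Yes. Selenium also supports Firefox, Safari, and Microsoft Edge, each through its own WebDriver implementation: GeckoDriver for Firefox, safaridriver for Safari, and Microsoft Edge WebDriver for Edge. In Python, you create the driver with webdriver.Firefox(), webdriver.Safari(), or webdriver.Edge() instead of webdriver.Chrome().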

Do I need to have the browser running to scrape websites with Selenium?

Yes, but the browser doesn’t need to render pages on screen. Web scrapers frequently use headless browsers, which run without a graphical user interface. For more information on using Selenium in headless mode, check out this guide.

What actions can Selenium perform?

The WebDriver API supports most user actions, such as clicking, typing, and taking screenshots. For a full list, refer to the Python API documentation. In addition, the API lets you execute any JavaScript code on the page, so it can do almost anything a sophisticated user could do in a real browser.

Author

Justas Vitaitis

Senior Software Engineer

Justas is a Senior Software Engineer with over a decade of proven expertise. He currently holds a crucial role in IPRoyal’s development team, regularly demonstrating his profound expertise in the Go programming language, contributing significantly to the company’s technological evolution. Justas is pivotal in maintaining our proxy network, serving as the authority on all aspects of proxies. Beyond coding, Justas is a passionate travel enthusiast and automotive aficionado, seamlessly blending his tech finesse with a passion for exploration.
